Computer vision

The Essential Guide to Zero-Shot Learning [2024]

16 min read

—

Jan 6, 2022

What is Zero-Shot Learning, how does it work, and what computer vison problems can we solve with this approach? Read on to find out and test your knowledge in practice with V7.

Rohit Kundu

Guest Author

Deep Learning has become an indispensable tool of Artificial Intelligence. Among its many applications, it is now commonly used to solve complex Computer Vision tasks through supervised learning.

However—

There are some restrictions for methods under this learning paradigm.

In supervised classification, one needs a large number of labeled training instances (for each class) to train a truly robust model.

In addition, the trained classifier can only classify the instances belonging to classes covered by the training data, and it cannot deal with previously unseen classes.

Furthermore, we may decide to obtain new data incrementally, not all at once.

Pro tip: Do you need to refresh your knowledge? Check out Supervised vs. Unsupervised Learning: What’s the Difference?

Training deep learning models requires a lot of computational power and time, and re-training such models from scratch to include the newly obtained data is often difficult.

This is where the concept of “Zero-Shot Learning” (ZSL) comes to the rescue.

In the next few minutes, we'll explain everything you need to know about Zero-Shot Learning.

Here’s what we’ll cover:

What is Zero-Shot Learning?

How does Zero-Shot Learning work?

How to choose a Zero-Shot Learning method?

Zero-Shot Learning methods evaluation metrics

Challenges in Zero-Shot learning

10 Applications of Zero-Shot Learning

And in case you are looking to build your own computer vision models from scratch—you're in for a treat!

Data labeling

Data labeling platform

Get started today

You can easily find quality data in our Open Datasets repository, label it, and train your AI models using V7. Check out:

What is Zero-Shot Learning?

Correctly categorizing objects from previously unseen classes is a key requirement in any truly autonomous object discovery system.

Zero-Shot Learning (ZSL) is a Machine Learning paradigm where a pre-trained deep learning model is made to generalize on a novel category of samples, i.e., the training and testing set classes are disjoint.

Pro tip: Learn more by reading The Train, Validation, and Test Sets: How to Split Your Machine Learning Data?

For example, a model trained to distinguish between images of cats and dogs is made to identify images of birds. The classes covered by the training instances are referred to as the “seen” classes, while the unlabeled training instances are referred to as the “unseen” classes.

Zero-Shot Learning Process

The general idea of zero-shot learning is to transfer the knowledge already contained in the training instances to the task of testing instance classification. Thus, zero-shot learning is a subfield of transfer learning.

Therefore, Zero-Shot Learning is a subfield of Transfer Learning.

The most common form of Transfer Learning involves fine-tuning a pre-trained model—a problem with the same feature and label spaces. This is called “homogenous transfer learning.”

However, Zero-Shot Learning belongs to “heterogeneous transfer learning”, where the feature and label spaces are disparate.

The Importance of Zero-Shot Learning

Data labeling is a labor-intensive job.

Most of the time invested in any AI project is allotted to data-centric operations. And—

It is especially difficult to obtain annotations, where specialized experts in the field are required to do the job. For example, in developing biomedical datasets, we need the expertise of trained medical professionals, which is expensive.

Pro Tip: If you are looking for qualified annotators, get in touch with our team or check out V7 Data Labeling Services.

What's more, you might be lacking enough training data for each class captured in the conditions that would help the model reflect the real-world scenarios.

For example—

If a new bird species has just been identified, an existing bird classifier needs to generalize on this new species. Perhaps the new species identified is rare and has only a few instances, while the other bird species have thousands of images per class. As a result, your dataset distribution will be imbalanced and, therefore, hindering model performance even in a fully supervised setting.

Methods like unsupervised learning also fail in scenarios where different sub-categories of the same object need to be classified—for instance, trying to identify different breeds of dogs.

Zero-Shot Learning aims to alleviate such problems by performing image classification on the fly on novel data classes (unseen classes) by using the knowledge already learned by the model during its training stage.

Pro Tip: Searching for quality datasets? Check out 65+ Best Free Datasets for Machine Learning.

How does Zero-Shot Learning work?

In Zero-Shot Learning, the data consists of the following:

Seen Classes: These are the data classes that have been used to train the deep learning model.

Unseen Classes: These are the data classes on which the existing deep model needs to generalize. Data from these classes were not used during training.

Auxiliary Information: Since no labeled instances belonging to the unseen classes are available, some auxiliary information is necessary to solve the Zero-Shot Learning problem. Such auxiliary information should contain information about all of the unseen classes, which can be descriptions, semantic information, or word embeddings.

Example of semantic embedding using an attribute vector

On the most basic level, Zero-Shot Learning is a two-stage process involving Training and Inference:

Training: The knowledge about the labeled set of data samples is acquired.

Inference: The knowledge previously acquired is extended, and the auxiliary information provided is used to the new set of classes.

Pro tip: Ready to train your models? Have a look at Mean Average Precision (mAP) Explained: Everything You Need to Know.

According to this research paper, humans can perform Zero-Shot Learning because of their existing language knowledge base (training), which provides a high-level description of a new or unseen class and makes a connection between this unseen class, seen classes, and visual concepts (inference).

In simple words—

Humans can naturally find similarities between data classes, e.g. noticing that both cats and dogs have tails, both walk on four legs, etc.

Zero-Shot Learning also relies on a labeled training set of seen and unseen classes. Both seen and unseen classes are related in a high dimensional vector space, called semantic space, where the knowledge from seen classes can be transferred to unseen classes.

Pro tip: Searching for a free image annotation tool? Have a look at our Complete Guide to CVAT—Pros & Cons.

How to choose a Zero-Shot Learning method?

The two most common approaches used to solve the zero-shot recognition problems are:

Classifier-based methods

Instance-based methods

Let us discuss them in more detail.

Classifier-based methods

Existing classifier-based methods usually take a one-versus-rest solution for training the multiclass zero-shot classifier.

That is, for each unseen class, they train a binary one-versus-rest classifier. Depending on the approach to construct classifiers, we further classify the classifier-based methods into three subcategories.

Correspondence Methods

Correspondence methods aim to construct the classifier for unseen classes via the correspondence between the binary one-versus-rest classifier for each class and its corresponding class prototype.

There is just one corresponding prototype in the semantic space for each class. Thus, this prototype can be regarded as the “representation” of this class.

Meanwhile, in the feature space, for each class, there is a corresponding binary one-versus-rest classifier, which can also be regarded as the “representation” of this class. Correspondence methods aim to learn a correspondence function between these two types of “representations.”

Relationship Methods

Such methods aim to construct a classifier or the unseen classes based on their inter-and intra-class relationships for unseen classes.

In the feature space, binary one-versus-rest classifiers for the seen classes can be learned with the available data. Meanwhile, the relationships among the seen and the unseen classes may be obtained by calculating the relationships among corresponding prototypes.

Relationship methods aim to construct the classifier for the unseen classes through these learned binary seen class classifiers and these class relationships. Meanwhile, the relationships among the seen and the unseen classes may be obtained by calculating the relationships among corresponding prototypes.

Combination Methods

The combination methods describe the idea of constructing the classifier for unseen classes by combining classifiers for basic elements used to constitute the classes.

In combination methods, it is regarded that there is a list of “basic elements” to form the classes. Each data point in the seen and the unseen classes are a combination of these basic elements.

For example, a “dog” class image will have a tail, fur, etc. Embodied in the semantic space, it is regarded that each dimension represents a basic element, and each class prototype denotes the combination of these basic elements for the corresponding class.

Each dimension of the class prototypes takes either a 1 or 0 value, denoting whether a class has the corresponding element. Therefore, methods in this category are mainly suitable for semantic spaces.

Instance-based methods

Instance-based methods aim first to obtain labeled instances for the unseen classes and then, with these instances, to train the zero-shot classifier. Depending on the source of these instances, existing instance-based methods can be classified into three subcategories, which are explained below:

Projection methods

The idea of projection methods is to obtain labeled instances for the unseen classes by projecting both the feature space instances and the semantic space prototypes into a shared space.

There are labeled training instances in the feature space belonging to the seen classes. Meanwhile, there are prototypes of both the seen and the unseen classes in the semantic space. The feature and semantic spaces are real number spaces, with instances and prototypes as vectors in them. In this view, the prototypes can also be regarded as labeled instances. Thus, we labeled instances in two spaces (the feature and semantic spaces).

In projection methods, instances in these two spaces are projected into a common space. In this way, we can obtain labeled instances belonging to the unseen classes.

Instance-borrowing methods

These methods deal with obtaining labeled instances for the unseen classes by borrowing from the training instances.

Instance-borrowing methods are based on the similarities between classes. Let's take object recognition in images as an example—

Suppose we want to build a classifier for the class “truck” but we do not have the corresponding labeled instances. However, we have some labeled instances belonging to classes “car” and “bus.”

They are similar objects to the "truck" and when training classifiers for the class “truck,” we can use instances belonging to these two classes as positive instances. This kind of method follows the way humans recognize given objects and explore the world.

We may have never seen instances belonging to some classes but have seen instances belonging to similar classes. With the knowledge of these similar classes, we can recognize instances belonging to the unseen classes.

Synthesizing Methods

The idea behind synthesizing methods is to obtain labeled instances for the unseen classes by synthesizing pseudo-instances using different strategies.

In some methods, in order to synthesize the pseudo-instances, instances of each class are assumed to follow some kind of distribution. Firstly, the distribution parameters for the unseen classes need to be estimated. Then, instances of unseen classes are synthesized.

Pro tip: Check out The Beginner's Guide to Self-Supervised Learning.

Zero-Shot Learning methods evaluation metrics

We use the average per category top-k accuracy to evaluate zero-shot recognition results.

For an N-class classification problem, classifiers output probability distribution for the test samples. A top-k (k ≤ N) accuracy refers to the scenario when the actual class of the sample (i.e., data label) lies in one of the classes with the “k” highest probabilities predicted by the classifier.

We use the average per category top-k accuracy to evaluate zero-shot recognition results.

For example, for a 5-class classification problem, if the predicted probability distribution is: [0.35, 0.20, 0.15, 0.25, 0.05] for classes 0,1,...,4, then the top-2 classes are class-0 and class-3. If the original data label is either class-0 or class-3, the trained classifier is said to have predicted correctly.

Thus, the following equation calculates the accuracy, determining whether a prediction is correct or not according to the rule mentioned above; “C” denotes the number of unseen classes.

Zero-Shot learning accuracy calculations

Challenges in Zero-Shot Learning

Like every concept, Zero-Shot Learning has its limitations. Here are some of the most common challenges you'll face when applying Zero-Shot Learning in practice.

Bias

During the training phase, the model has access only to the data and labels of the seen classes. This biases the model towards predicting unseen data samples during the test time as one of the seen classes. In cases where, during the test time, the model is evaluated on samples from both seen and unseen classes, the bias problem becomes more prominent.

Domain shift

Zero-Shot Learning models are developed primarily to extend a pre-trained model to novel classes when data from these become incrementally available— for example, adapting a classifier initially trained to distinguish between dogs and cats using supervised learning to classify birds on the fly.

Thus, the “domain shift” problem is commonplace in Zero-Shot Learning. Domain shift results when the statistical distribution of the data in the training set (seen classes) and the testing set (which may be samples from the seen or unseen classes) are significantly different.

Hubness

The hubness problem is related to the curse of dimensionality associated with the nearest neighbor search. Some points, called hubs, frequently occur in the k-nearest neighbor set of other points in high-dimensional data.

Hubness

In Zero-Shot Learning, the hubness problems occur for two reasons.

Firstly, both input and semantic features reside in a high-dimensional space. When such a high dimensional vector is projected into a low dimensional space, the variance is reduced, resulting in mapped points being clustered as a hub.

Secondly, ridge regression, widely used in Zero-Shot Learning, induces hubness. As a result, it causes a bias in the predictions, with only a few classes predicted most of the time regardless of the query.

The points in these hubs tend to be close to the semantic attribute vectors of many classes. Since we use the nearest neighbor search in semantic space during the testing phase, hubness leads to the deterioration in performance.

Semantic loss

While training on the seen classes, the model learns only the important attributes for distinguishing between these seen classes. But, some latent information may be present in the seen classes, which are not learned if they don’t contribute significantly to the decision-making process. However, this information might be important in the testing phase on the unseen classes. This is what we call semantic loss.

For example, a cat/dog classifier will focus on attributes like facial appearance, body structure, etc. The fact that both are four-legged animals is not a distinguishing attribute. However, this may be an essential deciding factor if the unseen class is “humans” during the test time.

10 Applications of Zero-Shot Learning

Finally, let's have a look at some of the most prominent Zero-Shot Learning applications.

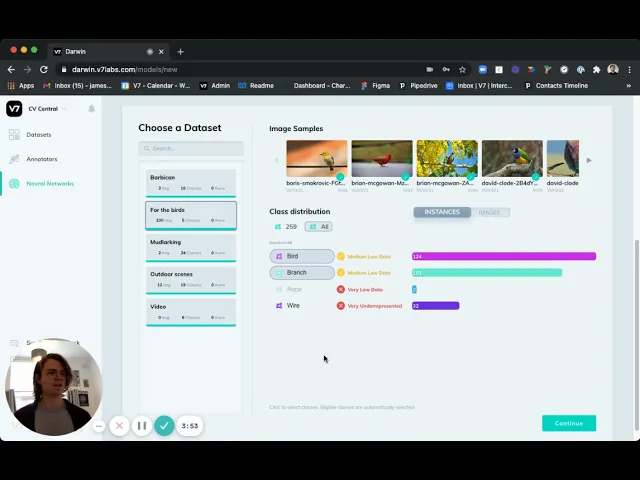

Image classification

Birds image classification on V7

Example: Visual Search Engines

Such systems get a visual input (like images) and search for information on the World Wide Web. Search Engines can be trained on thousands of classes of images, but still, novel objects may be supplied by users. Thus, a Zero-Shot Learning framework is helpful for such scenarios.

Semantic segmentation

Example: COVID-19 Chest X-Ray Diagnosis

The COVID-19 infection is characterized by white ground-glass opacities in the lungs of patients, which are captured by radiological images of the chest (X-Rays or CT-Scans). Segmenting the lung lobes out of the complete image can aid in the diagnosis of COVID-19. However, labeled segmented images of such cases are scarce, and thus a zero-shot semantic segmentation framework can aid in this problem.

V7 lung annotation

Pro tip: Curious about medical image annotation? Check out How to Label Medical Images for Machine Learning.

Image generation

Example: Text/Sketch-to-Image Generation

Several deep learning frameworks generate real photos using only text or sketch inputs. Such models frequently deal with previously unseen classes of data. A Zero-Shot text-to-image generator framework is devised in this paper, and a sketch-to-image generator is developed in this paper.

Pro tip: Check out Neural Style Transfer: Everything You Need to Know [Guide].

Object detection

Example: Autonomous vehicles

There is a need for detecting and classifying objects on the fly in autonomous navigation applications to decide what actions to take.

Bounding box annotations for object detection on V7

For example, seeing a car/truck/bus means it should avoid them, a red traffic light means to stop before the stop line, etc.

Detecting novel objects and knowing how to respond to them is essential in such cases, and thus a Zero-Shot backbone framework is helpful.

Pro Tip: Read The Ultimate Guide to Object Detection and YOLO Object Detection.

Image Retrieval

Image Retrieval using Zero-Shot learning

Example: Sketch-based Image Retrieval (SBIR)

This refers to the Zero-Shot classification of real images belonging to novel classes by using sketches as the only semantic inputs. This is a popular area of research.

Natural Language Processing

Example: Text classification

Classification of texts into topics, emotion recognition using Zero-Shot Learning is a popular area of research.

Make sure to also check out Optical Character Recognition: What is It and How Does it Work [Guide].

Action Recognition

Action recognition is the task of recognizing the sequence of actions from the frames of a video. However, Zero-Shot Learning can be a solution if the new actions are not available when training.

Computer vision-powered customer action recognition on V7

Example: Human-object Interaction Recognition

Style Transfer

Example: Artistic Style Transfer

Style Transfer in an image is the problem of transferring the texture of the source image to the target image while the style is not pre-determined and arbitrary.

Pro tip: Read more Neural Style Transfer: Everything You Need to Know [Guide].

Resolution Enhancement

Resolution enhancement using Zero-Shot Learning

Example: Single-Image Super-Resolution

Zero-Shot resolution enhancement problem aims at enhancing the resolution of an image without pre-defined high-resolution images for training examples.

Audio Processing

Example: Voice Conversion

Zero-Shot-based voice conversion of one speaker to another speaker’s voice is a recent field of research.

Zero-Shot Learning: Key Takeaways

Zero-Shot Learning is a Machine Learning paradigm where a pre-trained model is used to evaluate test data of classes that have not been used during training. That is, a model needs to extend to new categories without any prior semantic information.

Such learning frameworks alleviate the need for retraining models.

Furthermore, we do not need to worry about the class imbalance of datasets. It has been applied to several domains of Computer Vision like image classification and image segmentation, object detection and object tracking, NLP, etc.

However, there are some problems associated with the Zero-Shot Learning frameworks as well, and thus the domain is still undergoing research to improve its capabilities.

Read more:

13 Best Image Annotation Tools

Overfitting vs. Underfitting: What's the Difference?

What is Overfitting in Deep Learning and How to Avoid It

The Complete Guide to Panoptic Segmentation [+V7 Tutorial]

The Definitive Guide to Instance Segmentation [+V7 Tutorial]

The Beginner's Guide to Deep Reinforcement Learning

9 Reinforcement Learning Real-Life Applications

Mean Average Precision (mAP) Explained: Everything You Need to Know

The Complete Guide to Ensemble Learning

The Ultimate Guide to Semi-Supervised Learning

Deep Learning for Image Super-Resolution [incl. Architectures]