LLMs


May 19, 2025

Discover the top business use cases for LLMs, from document processing and financial analysis to AI agents automating complex workflows.

Rachel Heller

Content Writer

What’s the most surprising way you’ve used an LLM?

Wait – don’t answer that.

The extreme, bizarre, and – let’s be honest – hilarious ways people are chatting with GPTs are nothing compared to what large language models (LLMs) can do when you put them to work.

Picture this:

A financial team that once spent days analyzing quarterly reports now processes them in minutes.

A legal department that needed weeks for a contract review now completes a thorough analysis in a couple of hours.

A marketing team that struggled with content calendars now produces entire strategic campaigns in less than a day.

Welcome to 2026, where large language models (LLMs) have moved from experimentation to essential business infrastructure, upending tried-and-true ways of getting things done across industries.

And that’s what this article is all about.

What LLMs are good at (and not as good at) for organizations

What business teams are using LLMs for, and top use cases that apply to real job functions and tasks.

Solving business problems with LLMs

Let’s jump in.

So, what are LLMs good at – and not so good at?

If it seems that LLMs are everywhere these days, there's a good reason for that.

In a very short amount of time, popular LLMs like ChatGPT, Claude, and Gemini, and open-source models like LLaMA, DeepSeek, and Mistral, have become household names.

LLMs are behind all kinds of useful (and not so useful) applications. They are massive pattern-recognition engines that have, in effect, read much of the public internet – books, articles, websites, code repositories, business reports, legal filings, technical manuals, industry publications, and more – allowing them to predict what words should come next in any given context. They use this knowledge and capability to generate natural language responses to questions and prompts.

And this has proven to be incredibly useful for a whole range of tasks, like generating compelling content from blog posts to poetry, summarizing long-form content, answering questions by synthesizing information, and brainstorming ideas.

But the business case for LLMs extends further. Building on LLMs' extraordinary versatility, organizations are using them to do things like:

Turn unstructured data into business intelligence

Automate document processing

Extract key metrics and insights from massive text repositories

Analyze customer sentiment across thousands of interactions simultaneously

Translate technical documents

Write and debug code across programming languages

Produce first drafts of everything from financial reports to marketing copy

Synthesize research findings from many sources

Unlike traditional enterprise software requiring specific programming for each workflow, LLMs can shift between diverse business tasks through simple prompting.

An example of a business AI tool that handles specialized tasks through a chat interface (V7 Go and Concierge)

LLMs are embedded throughout today's business landscape – they're powering automated document processing systems, enhancing business intelligence platforms, speeding content operations, and rewriting how companies interact with customers.

Popular enterprise-grade LLMs like GPT-5 (powering many business intelligence systems), Claude 4.5, and Gemini 3, and open-source models like LLaMA, DeepSeek, and Mistral, have become standard components in modern business tech stacks.

Each offers its own quirks and specialties: GPT-5 is a good option for general business applications, Claude demonstrates nuanced reasoning for complex decision support, Gemini integrates with Google's enterprise ecosystem, while open-source models provide customization flexibility to support specific business processes.

Current limitations: Not quite your digital CFO (yet)

Despite their impressive capabilities, LLMs certainly aren't flawless. They struggle with surprisingly basic tasks and have important limitations:

Counting things accurately: Ask an LLM to count the words in a paragraph or characters in a sentence, and you might get an approximation rather than precision. They process text as "tokens" (word fragments) rather than seeing text as we do.

This means organizations must watch out for data accuracy. For example, when extracting financial figures or compliance requirements, LLMs may occasionally misinterpret numbers or provide imprecise data extractions – making verification processes essential for critical business applications.

Mathematical reasoning: While they can handle simple calculations, complex math often trips them up.

That means being prepared for inconsistent quantitative analyses. While they can describe financial trends, complex calculations often require specialized tools. An LLM might confidently present ROI projections that sound plausible but contain computational errors.

Following strict patterns: Tasks like maintaining specific rhyming schemes or syllable counts in poetry can be challenging. They understand meaning better than form.

Because LLMs grasp business concepts better than they maintain rigid document formats, tasks that need to strictly follow regulatory formats or industry-specific templates may need additional guardrails.

Hallucinations: Perhaps their most infamous flaw – LLMs occasionally generate information that sounds believable but is entirely fabricated.

This lack of factual reliability is concerning for business applications – they might reference nonexistent industry regulations or invent statistics with complete confidence.

These limitations stem from several factors: the statistical nature of LLMs' training (optimized to produce likely text rather than verified facts), limited context windows (they can't "see" all information at once), and their fundamental design based on semantic patterns rather than literal interpretation.
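Because of the counting and math limitations above, one common safeguard is to let the model extract figures and then redo the arithmetic in ordinary code. The snippet below is a minimal sketch of that idea, not a complete verification pipeline; the function name `totals_match`, the tolerance, and the sample values are hypothetical and chosen only for illustration.

```python
from decimal import Decimal

def totals_match(line_items, reported_total, tolerance=Decimal("0.01")):
    """Recompute a sum in code instead of trusting the model's arithmetic.

    line_items and reported_total are strings as they might appear in
    model-extracted output. Returns (is_consistent, computed_total).
    """
    computed = sum((Decimal(item) for item in line_items), Decimal("0"))
    is_consistent = abs(computed - Decimal(reported_total)) <= tolerance
    return is_consistent, computed

# Hypothetical values an LLM might have extracted from an invoice.
ok, computed = totals_match(["1200.00", "349.99", "50.01"], "1600.00")
print(ok, computed)
```

When the check fails, the document can be sent back for re-extraction or flagged for a person to review, rather than passing a wrong number downstream.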

Safety concerns: Trust but verify

Beyond technical limitations, LLMs raise important considerations around accuracy, completeness, and bias. They can reflect and sometimes amplify biases that exist in the underlying data they were trained on. And their outputs might sound authoritative even when incorrect, creating risks when used for strategic and/or critical decisions.

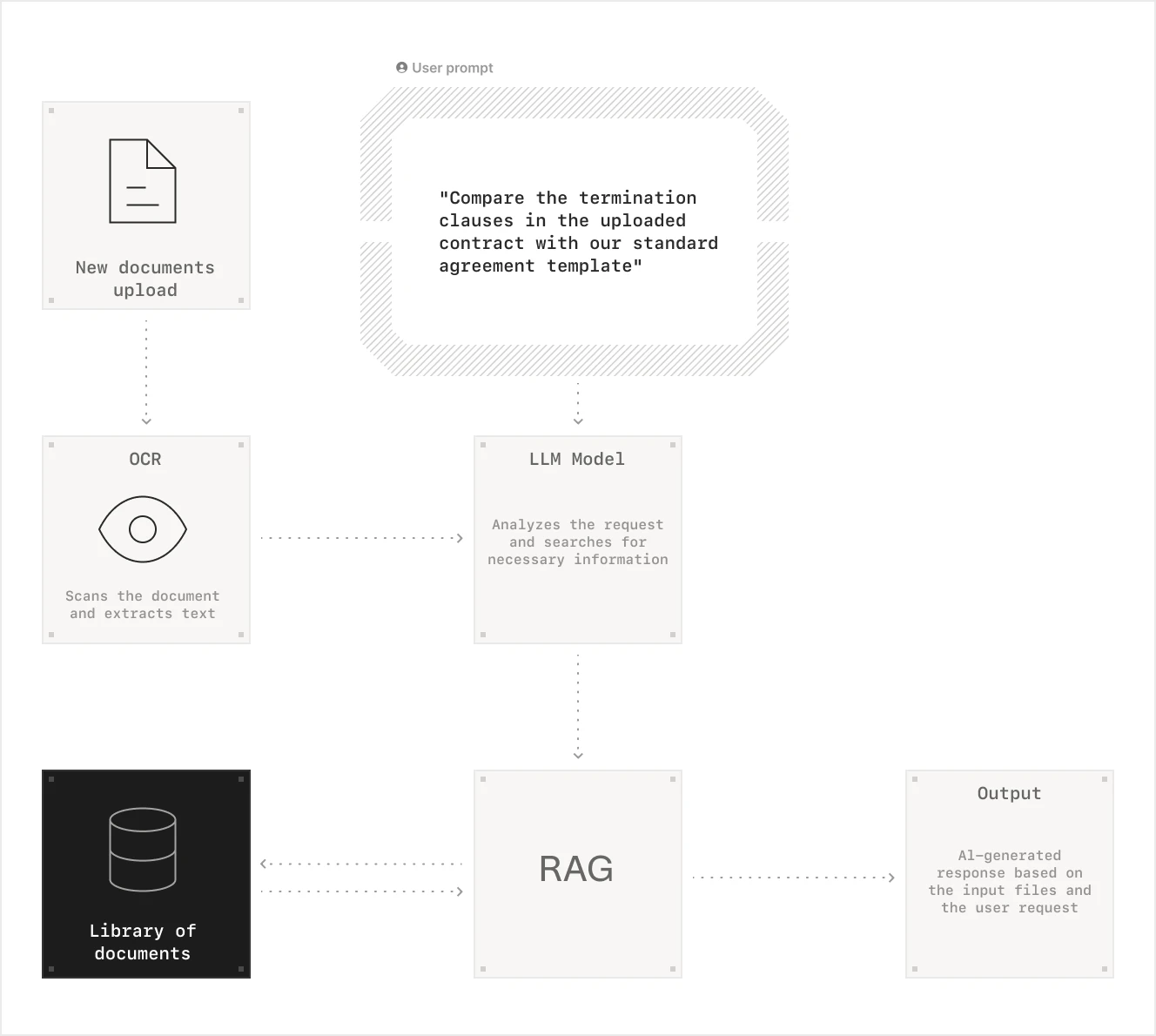

Given the huge potential value of LLMs, approaches and developments that address these challenges are proliferating and maturing. Techniques like retrieval-augmented generation (RAG) ground LLM outputs in verified information sources. Expanded context windows (now reaching millions of tokens) allow models to "see" more information at once. And fine-tuning helps models get hyper-accurate at specific tasks.

RAG grounds LLM responses in verified knowledge bases, enhancing accuracy.

Retrieval-augmented generation (RAG): Rather than relying on the model's training alone, RAG works by retrieving relevant documents from a trusted knowledge base before generating responses to ground answers in verified company data, reduce hallucinations, and provide citations to source material.

Expanded context windows: Many models can now process much longer inputs (in some cases millions of tokens) at once, which helps them comprehend complex documents, reduces information loss over the course of an interaction or process, and supports more coherent long-form outputs like financial reports.

Specialized fine-tuning: Fine-tuning is about training models on domain-specific datasets to improve performance and accuracy for specific applications like medical diagnostics or financial analysis.

Prompting techniques: How an LLM is prompted can have a significant impact on accuracy. Expecting high-accuracy output from a single prompt with no examples (known as zero-shot prompting) is similar to relying on Google’s old “I’m feeling lucky” search option. Applying prompt techniques like chain-of-thought, prompt chaining, and self-reflection can extend model reasoning over several iterative steps. Explore our prompt engineering guide for more details.

Constitutional AI: This approach applies explicit rules and principles ("constitutions") that guide LLM responses with the aim of reducing harmful, biased, or inappropriate outputs.

Red-teaming and adversarial testing: Just like its name implies, this is about deliberately attempting to make models produce problematic outputs to identify and fix vulnerabilities.

Human-in-the-loop (HITL) systems: Also living up to its name, HITL is when humans can review, correct, and approve AI outputs before they're finalized. Combining AI with human oversight is a natural way to make sure AI system output is appropriate. Learn more about reinforcement learning from human feedback (RLHF).
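To make the human-in-the-loop idea concrete, here is a minimal sketch of a review gate that decides whether an output can be approved automatically or should go to a person. It assumes the upstream system supplies some confidence score and a high-stakes flag; `ModelOutput`, `route`, and the 0.9 threshold are hypothetical names and values, not part of any particular product.

```python
from dataclasses import dataclass

@dataclass
class ModelOutput:
    text: str
    confidence: float         # hypothetical score in [0, 1] from the upstream system
    high_stakes: bool = False  # e.g. large payouts or legal commitments

def route(output, threshold=0.9):
    """Return 'auto_approve' or 'human_review' for a single model output."""
    if output.high_stakes or output.confidence < threshold:
        return "human_review"
    return "auto_approve"

outputs = [
    ModelOutput("Routine invoice, totals match.", 0.97),
    ModelOutput("Clause 4 may conflict with policy.", 0.62),
    ModelOutput("Approve payout of 50,000.", 0.99, high_stakes=True),
]
decisions = [route(o) for o in outputs]
print(decisions)
```

The design choice here is that high-stakes items always reach a person regardless of the score, since a confident-sounding output is not necessarily a correct one.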

And there are more approaches, too, including model cards and transparency documentation, to make model capabilities, limitations, and known biases available upfront. For auditing deployed AI systems, bias measurement and mitigation tools can detect and quantify bias in model outputs to help identify emerging issues.

Each of these approaches addresses different aspects of bias and risk challenges. The most robust solutions typically combine multiple techniques appropriate to the specific business context and use case. And they use multiple models together. Using several specialized models, or simply assigning tasks to the models best suited to them, is valuable for boosting performance and reducing overall error.
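As an illustration of the retrieval-augmented generation approach described above, the sketch below retrieves the most relevant passage from a small knowledge base and builds a prompt that asks the model to answer only from those sources and cite them. It is a toy version under stated assumptions: word overlap stands in for the vector search a real system would likely use, no model is actually called, and `KNOWLEDGE_BASE`, `retrieve`, and `build_grounded_prompt` are hypothetical names.

```python
import re

def tokenize(text):
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=2):
    """Rank documents by word overlap with the question (toy stand-in for vector search)."""
    q_words = tokenize(question)
    ranked = sorted(
        documents.items(),
        key=lambda item: len(q_words & tokenize(item[1])),
        reverse=True,
    )
    return ranked[:top_k]

def build_grounded_prompt(question, documents, top_k=2):
    """Assemble a prompt that restricts the model to the retrieved sources."""
    sources = retrieve(question, documents, top_k)
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in sources)
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"{context}\n"
        f"Question: {question}"
    )

# Hypothetical internal knowledge base.
KNOWLEDGE_BASE = {
    "policy-7": "Refunds are issued within 14 days of a return request.",
    "policy-9": "Enterprise contracts renew annually unless cancelled.",
    "hr-2": "Employees accrue vacation days monthly.",
}
prompt = build_grounded_prompt(
    "How many days until refunds are issued?", KNOWLEDGE_BASE, top_k=1
)
print(prompt)
```

The resulting prompt would then be sent to whichever model the system uses; because the source ids travel with the text, the answer can point back to the passage it relied on.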

Or, even better, combine them within an AI platform

Like a young and talented intern, on their own, or even working in tandem, LLMs can impress but still require a lot of supervision. And that can quickly get unmanageable.

LLMs deliver maximum value when integrated and orchestrated within workflow automation platforms. In these contexts, LLMs are connected to verified business data, and operate within defined governance frameworks. This secure scaffolding makes it possible for LLMs to reliably automate routine workflows as well as help with complex processes.

Which is when the real fun can begin. No joke – there’s an absolute ocean of opportunities for applying LLMs just waiting for you to jump in. Nestea plunge, here we come!

11 best large language model applications

If it’s hard to imagine what LLMs can help with in a business context, don’t worry – ChatGPT’s splash radius has overshadowed the quiet value being generated by LLMs at work. While popular consumer-facing LLM products continue generating dad jokes and helping with homework, enterprise applications of LLMs are steadily remaking the way entire business functions operate.

Like what? Here’s a top-level list of the eleven best business process applications of LLMs in 2025, plus three up-and-coming applications to keep your eye on.

1. AI agents for work automation

In 2026, LLMs are the new hires that never sleep, never complain, and master workflows in seconds.

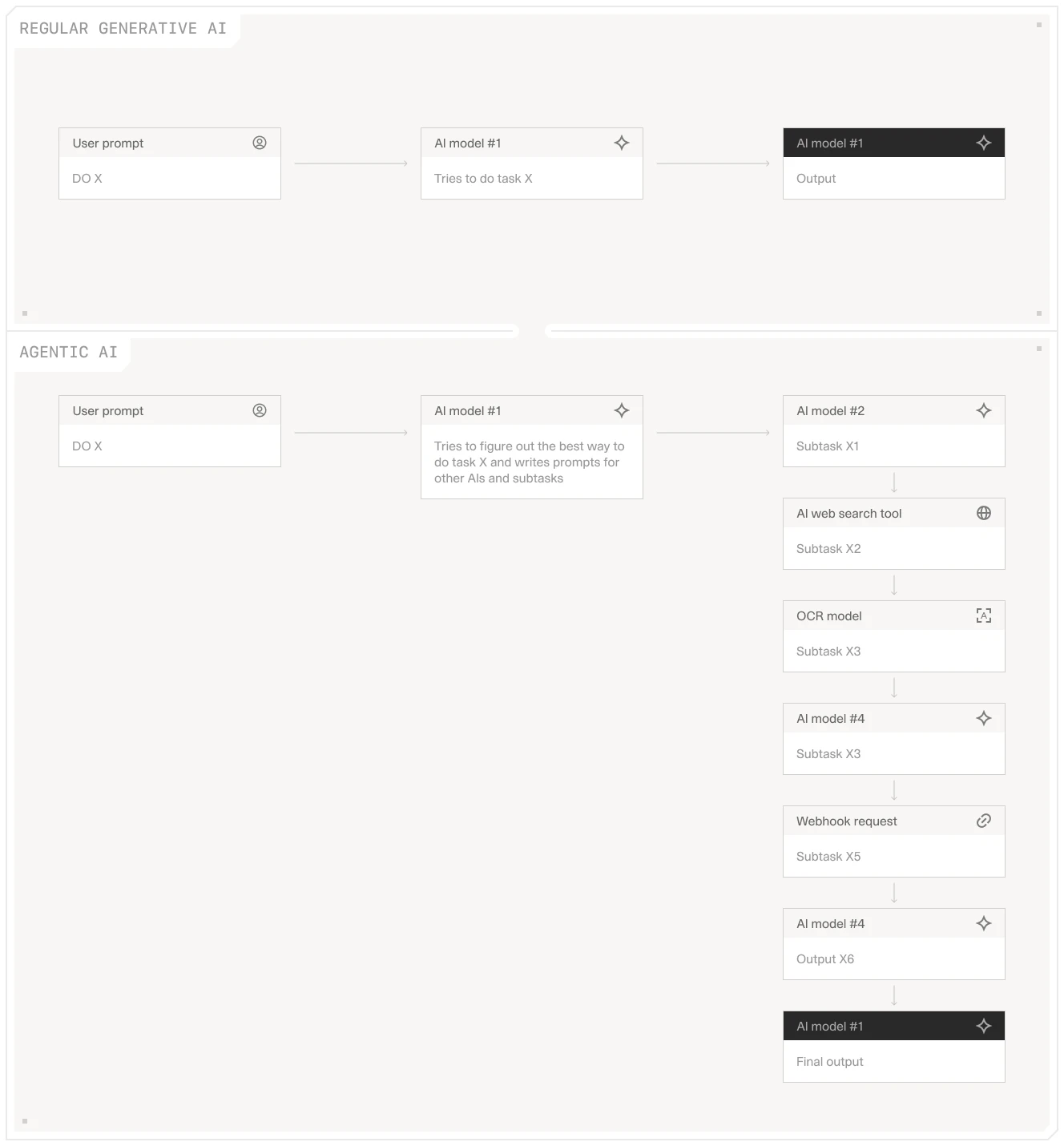

AI agents—coordinated LLMs equipped to interact with software systems—are the productivity multipliers businesses have dreamed about for decades. These are not the same thing as chatbots. And they aren’t just AI assistants, either.

The difference is autonomy. AI agents don't just answer questions; they take initiative to complete entire workflows.

AI agents can do things like:

Navigate multiple applications at the same time

Generate code for API integrations

Write prompts for other LLMs to create specialized content

Access databases, update CRMs, and synthesize data across systems

The most advanced implementations connect LLMs to work across your entire technology stack.

Agentic AI breaks down complex tasks into subtasks handled by specialized models and tools.

What does that look like?

When a client emails asking for a project update, an AI agent can extract the request, check project management software, pull data from relevant dashboards, generate a summary report, and write a response in seconds.

Insurance companies deploy agents that process claims by extracting information from submitted documents, cross-checking policy coverage, calculating payout amounts, and drafting response emails—all without human intervention for routine cases.

In real estate, agents parse listing data, analyze market trends, triage offering memorandums, and automatically generate property descriptions that highlight the most compelling features for specific buyer demographics.

Another application example is in venture capital. AI agents can automate the legwork of evaluating investment pitches. They can import company data and analyze key metrics like revenue growth, competitors, market size, and financials, so that analysts can spend their time vetting investment opportunities.
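The project-update example above can be sketched as a small chain of steps. This is only an outline of the control flow, assuming each step would be backed by an LLM call or a real system integration in practice; here every step is a plain Python stub, and `PROJECTS`, `extract_request`, `look_up_status`, `draft_reply`, and `run_agent` are hypothetical names.

```python
# Hypothetical project data standing in for a project management system.
PROJECTS = {"Apollo": {"status": "on track", "percent_complete": 70}}

def extract_request(email_body):
    """Stand-in for an LLM step that identifies which project the client means."""
    for name in PROJECTS:
        if name.lower() in email_body.lower():
            return name
    return None

def look_up_status(project):
    """Stand-in for a call to project management software."""
    return PROJECTS[project]

def draft_reply(project, status):
    """Stand-in for an LLM step that writes the response."""
    return f"{project} is {status['status']} and {status['percent_complete']}% complete."

def run_agent(email_body):
    """Run the steps in order, escalating when the request cannot be resolved."""
    project = extract_request(email_body)
    if project is None:
        return "ESCALATE: could not identify the project."
    return draft_reply(project, look_up_status(project))

reply = run_agent("Hi, could you send an update on Apollo?")
print(reply)
```

Note the escalation branch: even in a sketch this small, the agent needs a defined path for requests it cannot handle.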

2. Document data extraction

Unstructured data is a goldmine, and every organization is sitting on mountains of unstructured data in documents. Getting it out and into the light of day used to be slow and require multiple steps, even with intelligent document processing solutions to make things easier. Now, LLMs can unearth valuable information buried in business documents at high speed.

What makes these extraction capabilities particularly powerful is their contextual understanding. LLMs can identify relevant information even when formats change or when the information is implied rather than explicitly stated.

Put another way, LLMs can extract not just what's on the page, but what it means, while maintaining context across hundreds of pages.

LLMs can extract specific fields like vendor name, total amount, and currency from invoices.

Use LLMs to:

Extract specific data points from inconsistently formatted documents

Identify relationships between entities mentioned across multiple documents

Transform unstructured text into structured databases

Financial institutions use LLMs to extract key data from documents – from receipts to SEC filings – with high accuracy, processing in minutes what used to take hours of analyst time.

Legal teams deploy them to find clauses in contracts, pull precedents from case law, and identify relevant statutes and rulings from libraries of legal texts.

Insurance companies use LLMs to extract policy details, damage descriptions, and coverage limitations from submitted documentation. Explore some of the best data extraction tools powered by AI.
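Turning extracted text into structured records usually means asking the model for a fixed set of fields and validating the reply before it reaches a database. The sketch below assumes the model has been prompted to return JSON with the invoice fields mentioned above (vendor name, total amount, currency); the field names, `parse_invoice_fields`, and the sample reply are hypothetical.

```python
import json
from decimal import Decimal, InvalidOperation

REQUIRED_FIELDS = ("vendor_name", "total_amount", "currency")

def parse_invoice_fields(raw_json):
    """Parse and validate a model's JSON reply; raise ValueError on anything suspicious."""
    try:
        data = json.loads(raw_json)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [field for field in REQUIRED_FIELDS if field not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    try:
        total = Decimal(str(data["total_amount"]))
    except InvalidOperation as exc:
        raise ValueError("total_amount is not a number") from exc
    currency = str(data["currency"]).upper()
    if len(currency) != 3 or not currency.isalpha():
        raise ValueError("currency should be a 3-letter code")
    return {
        "vendor_name": str(data["vendor_name"]).strip(),
        "total_amount": total,
        "currency": currency,
    }

# Hypothetical model reply for one invoice.
reply = '{"vendor_name": " Acme Ltd ", "total_amount": "1249.50", "currency": "eur"}'
fields = parse_invoice_fields(reply)
print(fields)
```

Replies that fail validation can be retried or routed to a reviewer, which keeps malformed or incomplete extractions out of downstream systems.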

3. Web intelligence (way beyond basic search)

With LLMs to do the heavy lifting, automating web data collection – crawling, collecting, and interpreting web content – can go far beyond simple search. The best AI search applications combine web search with analysis.

LLMs act as information synthesis engines that can:

Monitor competitor websites for product changes and pricing updates

Track regulatory changes across government sites

Aggregate customer reviews and social media sentiment

Compile market research into cohesive reports

Pulling this kind of web intelligence into workflow automations means processes run with the most up-to-date information. For example, retailers use LLMs to track pricing trends across ecommerce platforms, then automatically adjust pricing strategies with AI agents based on the web data intelligence.

But even on its own, LLM-powered web search and scraping can speed workflows. For example, marketing teams generate competitive intelligence reports that would otherwise take days of manual research. And real estate analysts and property investors can generate detailed property performance and market analysis reports in minutes.

4. Financial analysis

In finance, where information advantage translates directly to profit margins, LLM-driven analysis is now invaluable. The LLM does the grunt work of processing earnings calls, financial statements, and market news, so analysts can have everything they need to make decisions.

Today's LLM-powered financial applications can:

Generate comprehensive investment memos from company filings

Compare quarterly reports across multiple periods to identify trends

Extract and normalize financial metrics from inconsistently formatted statements

Analyze merger documents to flag potential regulatory concerns

Venture capital firms now use LLMs to process startup pitch decks and financials, generating preliminary investment analyses that highlight red flags and opportunities. Investment banks deploy them to analyze M&A data rooms, processing thousands of documents to identify potential synergies and integration challenges.

The real power comes from combining extraction with analysis. LLMs don't just pull numbers—they contextualize them, comparing performance against industry benchmarks and flagging anomalies that might indicate opportunities or risks.

5. Document processing

Every business drowns in documents. Invoices, receipts, contracts, forms – the list goes on. Document automation systems have made a huge dent in all the paperwork, and with LLMs looped in they are able to do even more.

What makes LLM-based document processing so valuable is its flexibility. When there are exceptions, LLM-based systems don't simply error out—they reason through the problem.

Traditional document processing breaks down when formats change. LLMs instead recognize these changes as exceptions, and apply reasoning to resolve the issues, or raise them for human review. And that effectively eliminates the backlogs that used to build up from systems bumping into unusual document or data formats.

LLM-based systems offer flexible document processing adaptable to various formats and exceptions.

LLMs' flexibility and contextual understanding make it possible to classify incoming documents by type and urgency, route documents to the right person or department, and extract information to enter into databases while flagging inconsistencies or missing information. They can also generate response documents.

What does this look like in practice? In insurance, flexible document processing systems can work with wildly different claims documentation—from formal submissions to smartphone photos with handwritten notes—extracting damage descriptions, policy numbers, and claimant information while maintaining accuracy. Real estate companies process everything from standardized lease applications to property inspection reports with non-standard annotations.

LLMs are used for data extraction, document classification, and many other scenarios

6. Legal research and contract reviews

Even with an army of junior associates, researching case law, drafting standard legal documents, and reviewing contract terms still takes time.

Law firms use LLMs to conduct preliminary research, identifying relevant cases and statutes in minutes rather than hours. Corporate legal departments deploy them to review vendor contracts, flagging non-standard terms and suggesting modifications.

Specialized applications combine research with drafting. When negotiating contracts, legal teams use LLMs to identify comparable terms from previous agreements, then generate suggested language that aligns with company standards for the case at hand. See our guide on AI contract review for more examples.

7. Visual understanding: Image captioning

Integrating LLMs with computer vision models creates powerful multimodal systems that can understand, describe, and analyze visual content. This powers much faster data labeling, which is the lynchpin for creating the data necessary to train specialized AI models – accelerating the model development process.

These multimodal systems can be applied to:

Generate detailed image captions for accessibility and SEO

Automatically label images and video frames for AI training

Extract text and data from visual documents

Identify objects, scenes, and activities in visual content

LLMs can caption images and video frames in seconds. While this data still requires human review, getting pre-labeling done so quickly is a valuable shortcut.

Applications outside of AI development include real estate, where these systems automatically generate rich descriptions from property photos, highlighting architectural features, room dimensions, and aesthetic qualities, and insurance, where companies use them to analyze damage photos from claims, matching visual evidence against policy coverage.

8. Customer support

Customer support is on the frontlines of the LLM revolution. Integrating LLMs into customer service systems and processes has moved the industry far beyond simple FAQ chatbots.

LLM-powered customer intelligence systems do everything from managing complex service requests across multiple systems, to personalizing recommendations and responses based on customer history/preferences. LLMs are the brains behind always-on customer service agents.

Behind the scenes, they support proactively identifying and addressing potential issues and escalating to humans when needed.

Financial institutions use these systems to handle account inquiries, transaction disputes, and even preliminary loan applications. Ecommerce companies deploy them to manage order issues, product questions, and return requests.

Integration with backend systems extends the capabilities of LLMs. When a customer asks about an order status, the LLM can query order management systems, delivery tracking, and customer records to provide specific, accurate answers.

9. Content creation

Content creation is perhaps the most familiar application of LLMs, since they are, after all, built on content themselves.

Top business applications of LLMs for creating content:

Specialized content like articles and even technical documentation

Content tailored to different audience segments or specific business objectives

Consistent cross-platform content strategies

Marketing teams use LLMs to generate email campaigns that adjust messaging based on customer segments, including dynamically updating based on interaction data. Product teams deploy them to create documentation that updates itself when product features change.

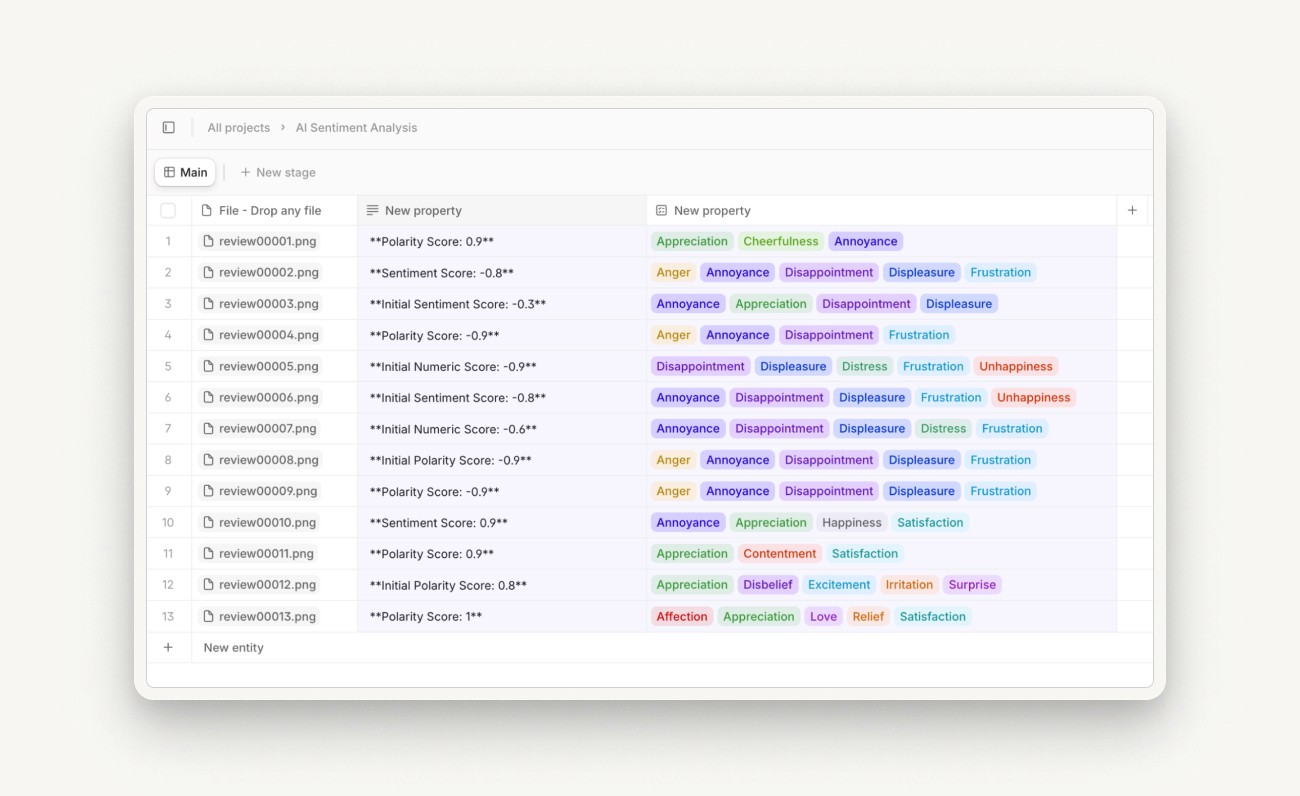

10. Content understanding at scale

Making sense of content across media formats is sometimes more art than science. The tone and context of content are often the key to correctly understanding its meaning.

LLMs are the new go-tos for automating this analysis to deliver content intelligence and moderate user-generated content platforms.

Paired with audio transcription capabilities, LLMs can generate summaries of video and audio content as well as assess its sentiment and tone. This is particularly useful for use cases involving customer communications, such as sales and customer support.

Sales teams can build searchable libraries of customer calls with key points highlighted, for better knowledge sharing and onboarding.

Customer experience teams can apply LLMs to analyze support interactions.

Marketing departments can track brand sentiment across social media platforms.

LLMs make it possible to access the information – and surface the insights – within content, conversations, and communications at scale. Learn more about AI sentiment analysis.

11. Meeting summarization

Meetings used to be where productivity went to die, because what happens in business meetings, which take place most often via video conferencing, tends to stay in the meeting – meaning ideas, action items, and important updates get left behind once the call is over.

LLMs are changing that. Integrated within meeting platforms, or used to analyze recordings, LLMs can summarize, itemize, and capture decisions and action items.

LLM-based meeting tools can generate real-time transcripts and summaries, identify key decisions and action items, create and assign follow-up tasks, and even connect meeting outcomes to project management systems.

Applied to project management and strategic initiatives that span many discussions, LLM-driven meeting intelligence can make the difference between successful implementations and missed objectives.

Another way LLMs support meetings is through integration with voice assistants that can retrieve information during meetings. When someone asks, "What were our Q1 results for the enterprise segment?" the system can find and present the data in real time.

3 next applications of LLMs in business

Organizations gaining the most value from LLMs aren't treating them as isolated tools or add-ons, but as core components. With this mindset, LLMs are being integrated throughout operations using secure AI workflow automation platforms to build what you might call "LLM-native" processes: workflows designed from the ground up with AI.

Combining and integrating capabilities is leading the way to new applications of LLMs. Three of the most exciting are:

Multimodal reasoning: Combining text, image, and eventually video understanding into unified systems that can reason across formats much as humans do. The value in settings like healthcare that involve many document types for a single case – patient medical images (X-rays, MRIs, CT scans), clinical notes, lab test results – is immense.

Collaborative AI agents: Multiple specialized agents working together in teams, with different LLMs handling different aspects of complex workflows based on their particular strengths. These are already becoming a reality with multi-agent systems. Their applicability is endless, but one example might be in finance to manage reporting:

A data analysis agent processes financial metrics and market trends

A regulatory compliance agent checks all content meets SEC requirements

A narrative generation agent writes explanations to link data to business outcomes

A visualization agent produces charts and graphics

An editor agent reviews the entire report for consistency and clarity

Autonomous data discovery: LLMs proactively exploring available information sources, surfacing insights without being driven by a specific human request. Take retail inventory management, for example. The LLM-based system would monitor many relevant data sources at the same time – inventory levels, supplier delivery patterns and disruptions, competitor behavior and pricing, and even weather forecasts along shipping routes and social media signals that hint at trends affecting demand. With this continuous stream of data, the LLM assesses current inventory levels and predicts future needs.

These paint a pretty optimistic picture of the future of LLMs for business applications. Is that future very close? That depends on how you implement LLMs.

How good are LLMs at solving business problems?

The common thread through all of the above applications is that LLMs aren't stand-alone chat interfaces; they're integrated systems where LLMs serve as cognitive infrastructure embedded within workflow automation platforms.

That’s the key to harnessing LLMs to solve business problems. And it’s a distinction that matters – a lot.

To put it another way, what separates leading organizations from the rest isn't access to LLM technology—it's how they orchestrate that technology within operations. And successful implementations usually have a set of key features in common:

Multi-model orchestration: Rather than relying on a single LLM for all tasks, AI automation systems route work to models based on their strengths. Financial calculations might go to DeepSeek, while customer communications flow through Claude. This specialization can produce more accurate, reliable outcomes than any single model delivers on its own.

System integration: LLMs are connected to the full technology stack, with secure access to databases, internal knowledge bases, and operational systems—allowing them to both access information and get work done.

Human-in-the-loop: The most effective systems are designed to work in tandem with people, increasing speed and process effectiveness autonomously, while seeking input on exceptions and decisions that need human judgment.

Continuous learning: Effective implementations capture feedback on LLM outputs, using this data to refine prompts, adjust routing rules, and improve performance. Orchestrated AI systems evolve over time, becoming increasingly valuable business assets.
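The multi-model orchestration feature in the list above can be pictured as a routing table that sends each task type to a preferred model and falls back to a general one otherwise. This is a minimal sketch, assuming the task type is already known; the handlers are stubs that only label the payload rather than calling any real model API, and `make_stub`, `HANDLERS`, and `route_task` are hypothetical names. The pairing of task types with models simply mirrors the example in the text.

```python
def make_stub(model_name):
    """Return a stand-in for a real model client; it only labels the payload."""
    def call(payload):
        return f"[{model_name}] {payload}"
    return call

# Hypothetical routing table: task type -> model handler.
HANDLERS = {
    "financial_calculation": make_stub("deepseek"),
    "customer_communication": make_stub("claude"),
    "general": make_stub("general-purpose"),
}

def route_task(task_type, payload, handlers=HANDLERS, default="general"):
    """Send the payload to the handler for its task type, or to the default."""
    handler = handlers.get(task_type, handlers[default])
    return handler(payload)

print(route_task("financial_calculation", "Compute Q3 gross margin"))
print(route_task("meeting_notes", "Summarize Monday's call"))
```

Keeping the routes in a plain table means they can be adjusted as feedback on output quality comes in, which is one way the continuous learning described above could be applied.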

In this context, multi-agent systems stand to move to the forefront of LLM applications, becoming adaptive intelligence networks that reconfigure themselves based on the conditions at hand.

This next evolution brings a level of self-awareness to agentic systems, where they review their own architecture and capabilities to suggest/request new components or modifications. It’s a big move: AI stops being a reactive tool and becomes a proactive business partner capable of independent reasoning, coordination, and execution.

The magic isn't in the model – it's in how you integrate it into your operations.

The bottom line here is that organizations getting the most from LLMs aren’t using a single, powerful magic-bullet model. They're solving business problems by effectively integrating LLMs to capitalize on their capabilities across operations and amplify the strengths of human teams.

The true breakthrough is the orchestration layer that transforms LLMs from productivity sidekicks into business-critical infrastructure that drives measurable outcomes.

V7 Labs' AI orchestration platform supports many of the capabilities discussed in this piece. To explore more use case ideas for LLMs, visit v7labs.com or contact our solutions team.

With more than a decade of experience in cloud and AI, Rachel is a product marketing veteran specializing in connecting technology with real-world impact. A recognized thought leader in emerging technologies, Rachel writes extensively about work tech, applied AI, Intelligent Document Processing (IDP), and agentic automation.