AI implementation

Feb 18, 2026

The release of ChatGPT in 2022 was a genuinely transformational moment in AI. Still, in 2026, what are the most powerful alternatives?

Imogen Jones

Content Writer

For many teams, ChatGPT has become the default interface to AI. It’s fast, fluent, and remarkably capable. In just a few years, it has reshaped expectations of what software should feel like. People now increasingly expect systems to understand context, respond in plain English, and adapt naturally to follow-up questions, without rigid menus, forms, or dropdowns.

But as organizations move beyond experimentation, a more practical question starts to surface:

Is ChatGPT the right tool for this specific task?

If you're searching for “ChatGPT alternatives” in 2026, the cause is only sometimes dissatisfaction with ChatGPT itself. More often, it’s about fit. That means fit for stricter security requirements, for structured workflow automation, for domain-specific reasoning, for scale, and most importantly, for automating entire processes rather than answering one prompt at a time.

In this article, we’ll explore:

The story and core capabilities of ChatGPT

The strengths and trade-offs of other leading LLMs

AI platforms that move beyond chat, and bring full workflows to life

What Is ChatGPT?

ChatGPT is OpenAI’s conversational interface built on top of its GPT family of large language models (LLMs). An LLM is a neural network trained on vast amounts of text data to predict the next word in a sequence. While that sounds simple, this probabilistic prediction mechanism enables models to generate essays, summarize complex documents, write code, translate languages, extract structured information, and reason across long passages of text.
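To make "predicting the next word" concrete, here is a minimal sketch in Python. It assumes a toy vocabulary with made-up scores; a real model scores tens of thousands of tokens with a neural network, and nothing here reflects how OpenAI actually implements it. The names `softmax` and `sample_next_token` are illustrative.

```python
import math
import random

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def sample_next_token(scores, rng):
    """Pick one token at random, weighted by its probability."""
    probs = softmax(scores)
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical scores a model might assign after "The invoice is due next"
scores = {"week": 3.0, "month": 2.5, "year": 0.5, "banana": -4.0}

probs = softmax(scores)
most_likely = max(probs, key=probs.get)  # "week" has the highest score
next_token = sample_next_token(scores, random.Random(0))
```

Generating a full sentence is this same step repeated: the chosen token is appended to the text, the model scores the vocabulary again, and another token is sampled.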

OpenAI was founded in 2015 with the goal of developing safe artificial general intelligence. After early research iterations, ChatGPT launched publicly in November 2022.

The response was genuinely unprecedented. It became the fastest-growing consumer application in history, gaining more than 100 million users in two months. As of 2026, it's one of the five most trafficked websites globally. For many people, ChatGPT was their first direct experience interacting with a large language model, and it played a central role in catalyzing the modern AI boom.

ChatGPT proved that advanced AI didn’t need to feel technical or intimidating. It could feel conversational, intuitive, even collaborative.

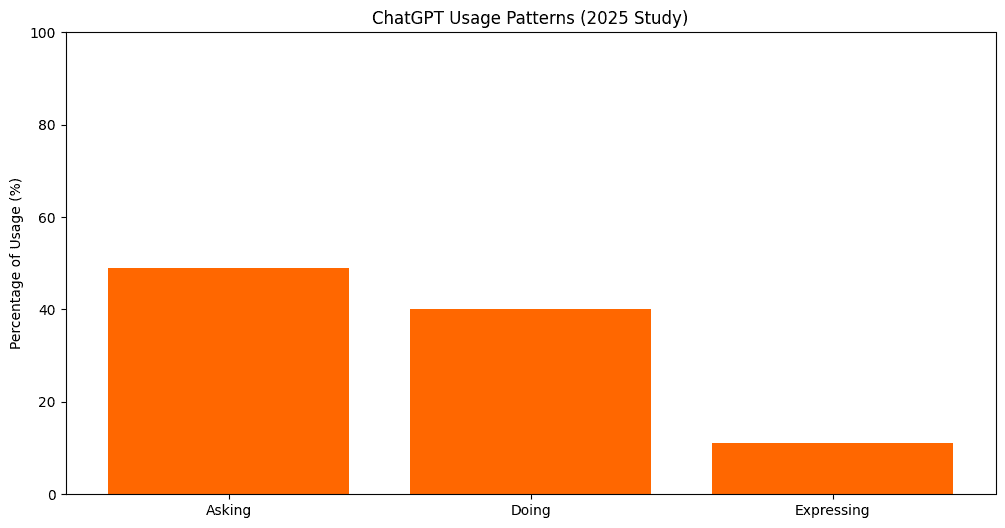

The chart shows how ChatGPT usage breaks down into three categories: Asking (49%), Doing (40%), and Expressing (11%).

Nearly half of interactions fall into Asking, with users seeking advice, explanations, or information, treating ChatGPT as an on-demand advisor. Doing represents task-oriented work such as drafting text, planning, or coding, where the model is used to generate outputs or complete practical tasks. Expressing, the smallest category, captures more exploratory or personal uses like reflection, creativity, and experimentation.

Bar chart generated using ChatGPT, based on 2025 OpenAI research

Today, ChatGPT is still the most widely used LLM platform. It is widely regarded as a jack-of-all-trades, strong across writing, coding, and image generation.

But if you’ve arrived at this article, you’re likely exploring alternatives. You may be comparing models to see which performs best for your specific use case. Or you may have realized that generating text is only part of the challenge, and that the real opportunity lies in automating structured, multi-step workflows.

That’s where the search for alternatives begins.

Not All “ChatGPT Alternatives” Are the Same

When people search for “ChatGPT alternatives,” they’re usually comparing one of two very different categories:

Other LLMs (model-level alternatives)

AI platforms built on top of LLMs (system-level alternatives)

If you’re comparing LLMs, you’re effectively swapping one reasoning engine for another, and evaluating differences in context length, coding ability, pricing, safety alignment, or tone. That’s a model choice.

AI platforms and AI agents operate at a different layer. They combine models with orchestration, tools, integrations, and governance. Instead of just generating text, they embed reasoning inside real workflows. Platforms like V7 Go pair models with tools such as Python execution, web search, and system integrations, allowing agents to extract data, validate it, run calculations, update systems, and route exceptions within structured processes.

Importantly, these platforms often let you choose between models depending on the task, combining strengths rather than relying on a single engine.

You can see this illustrated below, and learn more in our blog, What Are AI Agents?

Category 1: LLM Alternatives to ChatGPT

If you are comparing ChatGPT to other large language models, you are essentially swapping one reasoning engine for another.

Common alternatives include:

Claude (Anthropic)

Learn more: Anthropic

Claude and ChatGPT are both frontier large language models, with different positioning and strengths. Claude was developed by Anthropic, an AI research company founded by former OpenAI researchers. Anthropic positions Claude as a safety-focused, enterprise-ready alternative to other frontier models.

Compared to ChatGPT, Claude does not offer certain product-layer features such as built-in image generation, a public marketplace for custom assistants, or an integrated Python sandbox for data analysis within the core chat interface. ChatGPT’s ecosystem is broader at the consumer-product level.

Where Claude differentiates itself is in depth and structure. One of Claude’s defining strengths is its large context window, which allows it to process very long documents (sometimes hundreds of pages) in a single prompt. This makes it particularly useful for document-heavy tasks like legal analysis, research synthesis, and long-form summarization. Claude is also known for its cautious tone and structured reasoning style, which appeals to regulated industries concerned about risk and hallucination.

It is available via API and through integrations with platforms such as Slack and enterprise knowledge tools.

Anthropic has also introduced Claude Code, a developer-focused environment designed to support software engineering workflows. Claude Code enables the model to interact more directly with codebases, assist with refactoring, debugging, documentation generation, and multi-file reasoning.

Anthropic Pricing

Claude is available to individuals for free, with paid tiers for heavier use: Pro at $20/month and Max at $100/month. For businesses, the Team plan starts at $25 per user per month ($30 month-to-month), with premium seats at $150/month that include the Claude Code developer environment. Enterprise pricing is custom and adds features such as SSO, audit logging, expanded context windows, and compliance APIs.

Gemini (Google)

Learn more: Google DeepMind

Gemini is Google’s flagship large language model and is integrated into Google’s ecosystem of products. It powers AI features across Google Workspace, Search, and Android, and is available to developers via Google Cloud.

Gemini’s strength lies in multimodality (it can process text, images, audio, and video well) and in its tight integration with Google’s data infrastructure. For organizations already embedded in Google’s enterprise stack, Gemini can feel like a natural extension of existing workflows. It also benefits from Google’s scale in infrastructure and research.

Compared directly with ChatGPT, the difference often comes down to ecosystem and emphasis. ChatGPT is often perceived as stronger in open-ended creative tasks and conversational fluency. Gemini, on the other hand, supports very large context windows in its advanced tiers and has strong web search capabilities.

Gemini Pricing

Gemini is offered in multiple tiers, including a free tier and several consumer plans starting at $7.99 a month for Plus. For developers and enterprises, Gemini is available via API through Google Cloud’s Vertex AI platform. API pricing varies by model tier: lighter “Flash” or general-purpose models are priced lower per million tokens, while higher-tier Pro models with larger context windows and stronger reasoning capabilities cost more.

LLaMA (Meta)

Learn more: Meta

LLaMA (Large Language Model Meta AI) is a family of open-weight models released by Meta. Unlike fully closed systems, LLaMA models can be downloaded, fine-tuned, and deployed within private infrastructure. This makes them attractive to organizations that want maximum control over data, customization, or deployment environments. LLaMA models are often used as the foundation for custom AI applications, internal copilots, and research experimentation.

Compared to ChatGPT, which offers a polished, ready-to-use interface with built-in tools and hosting, LLaMA is much more of a build-it-yourself option. It provides flexibility and ownership, but not the out-of-the-box experience. Deploying LLaMA typically requires engineering expertise and infrastructure management, so organizations choosing it are usually prioritizing control, customization, and self-hosting over convenience and immediate usability.

LLaMA (Meta) Pricing

Because LLaMA models are open-weight, pricing works differently. Unlike closed API models, LLaMA does not have per-token usage fees from Meta; instead, costs come from infrastructure, including GPU compute, hosting, scaling, and DevOps. Real-world enterprise expenses vary based on model size (e.g., 8B vs 70B), hardware requirements, and inference volume.

Mistral

Learn more: Mistral

Mistral is a European AI company known for producing high-performance, efficient open and commercial language models. Mistral’s models are often praised for achieving strong reasoning performance relative to their size, which can translate into lower infrastructure costs and faster response times.

Compared to ChatGPT, Mistral is less of a consumer-facing, all-in-one AI product and more of a flexible model layer for builders. Like LLaMA, Mistral models are frequently used in developer-centric environments where companies want to build AI systems tailored to their own needs.

Mistral positions itself as an alternative to large, centralized AI providers, offering both open-weight models and enterprise licensing options. For teams with strong technical capability, Mistral provides flexibility and cost control.

Mistral Pricing

Mistral offers a free tier with usage limits, a Pro plan starting at $14.99 per month with higher limits and coding support, and a Team plan from $24.99 per seat per month for collaborative workspaces. API pricing typically ranges from roughly $2–$4 per million input tokens and $6–$12 per million output tokens for smaller models, and $8–$15 input and $25–$40 output for larger reasoning models, with enterprise discounts available.
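Per-million-token pricing is easier to reason about with a worked example. The sketch below is a rough estimate only: it plugs the smaller-model ranges quoted above into a simple formula, and the monthly workload (50 million input tokens, 10 million output tokens) is a hypothetical figure. Always check the provider's current price list before budgeting.

```python
def estimate_api_cost(input_tokens, output_tokens,
                      input_price_per_m, output_price_per_m):
    """Estimated USD cost: tokens are billed per million, per direction."""
    input_cost = (input_tokens / 1_000_000) * input_price_per_m
    output_cost = (output_tokens / 1_000_000) * output_price_per_m
    return input_cost + output_cost

# Hypothetical monthly workload (an assumption, not a benchmark)
INPUT_TOKENS = 50_000_000
OUTPUT_TOKENS = 10_000_000

# Low and high ends of the smaller-model range quoted in this article
low_estimate = estimate_api_cost(INPUT_TOKENS, OUTPUT_TOKENS, 2.0, 6.0)
high_estimate = estimate_api_cost(INPUT_TOKENS, OUTPUT_TOKENS, 4.0, 12.0)

print(f"Estimated monthly spend: ${low_estimate:,.2f} to ${high_estimate:,.2f}")
```

For this workload the estimate runs from $160 (50 × $2 + 10 × $6) to $320 (50 × $4 + 10 × $12) per month. Output tokens cost more per million than input tokens, so tasks that generate long responses move the total faster than tasks that read long documents.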

Grok (xAI)

Learn more: Grok

Grok is developed by xAI, the artificial intelligence company founded by Elon Musk. It is integrated directly into the X (formerly Twitter) platform, giving it real-time access to public conversations and trends. Grok is positioned as a conversational model with a more informal tone and a willingness to address topics other models may avoid. Its differentiation lies partly in its integration with social data and its branding as an alternative to more tightly aligned models. While Grok is evolving rapidly, its current primary use case is conversational and informational rather than enterprise workflow automation.

Organizations considering Grok typically do so for exploratory or consumer-facing use rather than structured operational deployment.

Grok (xAI) Pricing

Grok offers a free Basic tier with limited context and chat access, plus image and voice features. SuperGrok costs $30 per month, while SuperGrok Heavy at $300 per month provides a 256,000-token context window and full Grok capabilities. For teams, a Business plan at $30 per seat per month adds collaboration tools and admin controls, with Enterprise pricing available on request.

Category 2: AI Platform Alternatives to ChatGPT

Not all ChatGPT alternatives are simply different language models. Some represent an entirely different category: full AI platforms built to operationalize AI inside real workflows.

Some are built around a single LLM, meaning the “brain” is fixed. Others are model-agnostic, allowing teams to choose the underlying LLM depending on the task, cost, or performance requirements.

V7 Go

Website: v7labs.com/go

Unlike ChatGPT, which is optimized for conversational text generation, V7 Go is designed to orchestrate multi-step workflows. It is an end-to-end automation platform built to power agentic workflows around document-heavy processes.

It comes with pre-built agents, such as invoice processing, lease abstraction, and financial document analysis, all fully configurable to your needs. Teams can also design bespoke agents tailored to their own internal workflows, approval chains, and reporting requirements.

On document processing benchmarks, V7 Go achieves 95–99% accuracy and supports context windows of over 1 million tokens, enabling it to handle complex, multi-document workflows without losing coherence.

Traceability is a defining feature. Every extracted data point is visually linked to its original source using bounding boxes, creating a clear, defensible audit trail. If an agent pulls revenue from page 47 of a CIM, you can click directly to the highlighted section. This dramatically reduces hallucination risk and makes the platform well-suited to compliance-heavy environments like finance, legal, and insurance.
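As a rough illustration of what source-linked extraction can look like as data, here is a minimal Python sketch. It is not V7 Go's actual schema or API; the class names, the normalized coordinate convention, and the revenue value are all hypothetical and chosen only to show the idea.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BoundingBox:
    """Where a value sits on the page (hypothetical 0-1 normalized coordinates)."""
    page: int
    x0: float
    y0: float
    x1: float
    y1: float

@dataclass(frozen=True)
class ExtractedField:
    """An extracted value that always carries a pointer to its source."""
    name: str
    value: str
    source: BoundingBox

def citation(field: ExtractedField) -> str:
    """Render a human-readable reference back to the source page."""
    return f"{field.name} = {field.value} (page {field.source.page})"

# Illustrative value only; page 47 mirrors the CIM example above
revenue = ExtractedField(
    name="revenue",
    value="$12.4M",
    source=BoundingBox(page=47, x0=0.12, y0=0.40, x1=0.55, y1=0.44),
)
print(citation(revenue))
```

Because every field requires a `source`, a value with no location on the page cannot be created at all, which is what makes an audit trail enforceable rather than optional.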

V7 Go’s Knowledge Hubs connect agents to internal playbooks, policies, and reference documents. Instead of retraining models, teams provide structured access to existing institutional knowledge. As source documents evolve, the intelligence stays current.

Importantly, V7 Go is model-flexible. Teams can choose between different leading LLMs depending on the task: using smaller models for structured extraction, larger reasoning models for complex analysis, or switching models as performance and cost requirements evolve.
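The idea of matching model to task can be sketched as a small routing table. This is a generic illustration in Python, not V7 Go's configuration format; the task types and model labels are hypothetical placeholders rather than real product identifiers.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelChoice:
    name: str
    reason: str

# Hypothetical model labels; swap in whichever models your platform offers.
ROUTES = {
    "extraction": ModelChoice("small-fast-model", "structured fields at high volume"),
    "analysis": ModelChoice("large-reasoning-model", "complex multi-step reasoning"),
}
DEFAULT = ModelChoice("general-model", "no specific route configured")

def pick_model(task_type: str) -> ModelChoice:
    """Return the model configured for a task type, or a general fallback."""
    return ROUTES.get(task_type, DEFAULT)

for task in ("extraction", "analysis", "translation"):
    choice = pick_model(task)
    print(f"{task}: {choice.name} ({choice.reason})")
```

Keeping the mapping in one place means a team can change which model handles a task by editing a single entry, without touching the workflow itself.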

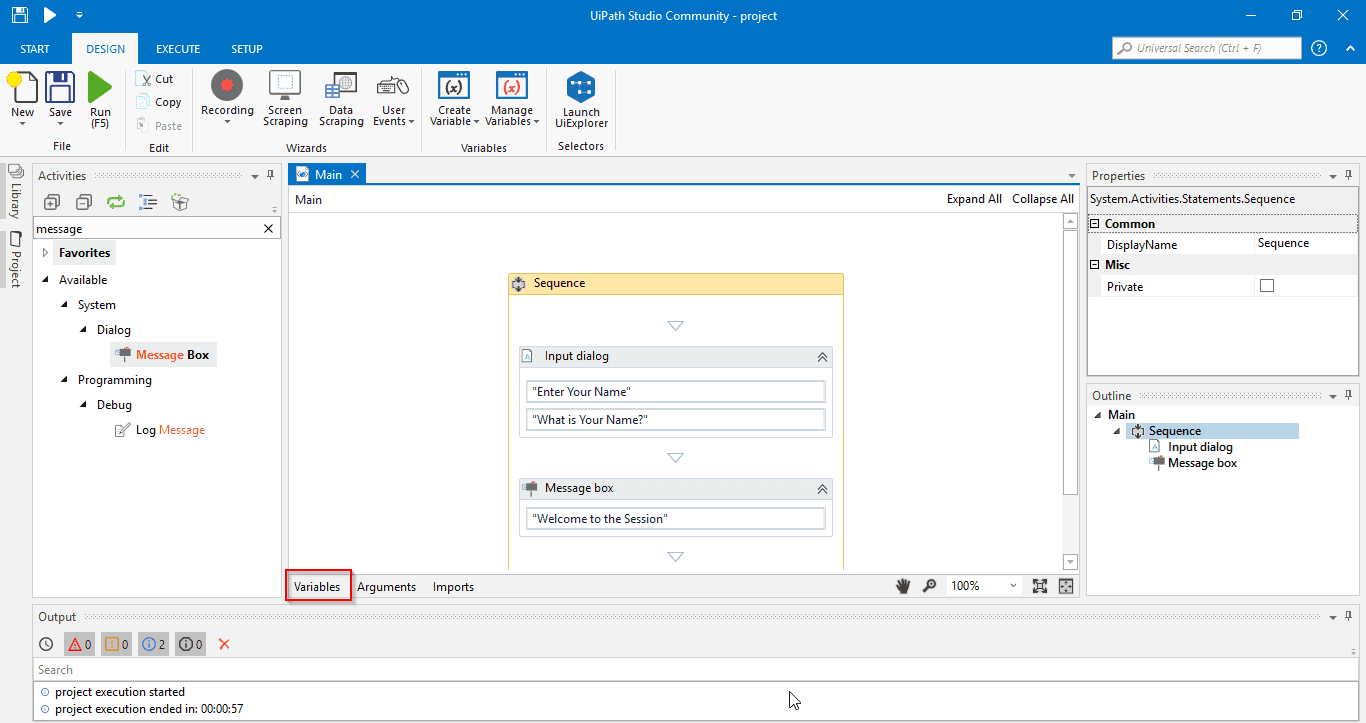

UiPath

Website: uipath.com

UiPath is a global software company founded in Bucharest in 2005. It began as a robotic process automation (RPA) company, focused on automating repetitive, rules-based tasks across enterprise systems. Its early strength was mimicking human actions, like clicking buttons, copying data between systems, and executing structured workflows without changing underlying software.

In recent years, UiPath has evolved beyond classic RPA into what it calls Agentic Automation. This combines traditional automation bots with AI models, document understanding, process mining, and orchestration tools. The idea is to move from purely deterministic automation (if-this-then-that) to systems that can incorporate reasoning, unstructured document analysis, and human-in-the-loop review.

Technologically, UiPath integrates LLMs into its broader automation stack. Its platform includes document processing, API integrations, workflow designers, governance controls, and enterprise deployment tooling. The AI layer enhances decision-making inside workflows that were previously rigid.

UiPath remains a powerful but complex enterprise platform. Its roots in RPA mean workflows can still feel structured and engineering-heavy compared to newer AI-native systems. Implementation often requires dedicated automation teams, governance frameworks, and longer deployment cycles.

Automation Anywhere

Website: automationanywhere.com

Automation Anywhere, like UiPath, originated in the robotic process automation space. Founded in 2003, it built its platform around automating repetitive business processes, especially in finance, HR, operations, and customer service. Its core strength historically lay in bot-based automation integrated across enterprise systems.

In recent years, Automation Anywhere has leveraged LLMs to extend traditional bot automation into more cognitive tasks, incorporating machine learning, intelligent document processing, and generative AI capabilities into its platform. Its technology stack includes process discovery tools, bot orchestration, document extraction, API integrations, and governance frameworks. The goal is similar to UiPath’s: combine structured automation with AI-driven reasoning.

Automation Anywhere emphasizes enterprise scalability, security, and compliance. It often integrates into large, system-heavy environments where automation needs to coordinate across ERPs, CRMs, and legacy applications.

Like other legacy automation platforms, Automation Anywhere can require significant setup and configuration. It excels at orchestrating complex enterprise workflows but may feel heavyweight and less modern than more recent AI-native solutions.

Hebbia

Website: hebbia.com

Founded in 2020 by George Sivulka, Hebbia is a specialized AI platform built for knowledge workers in finance, legal, and consulting. Its core product — often referred to as Matrix — leverages a sophisticated multi-agent architecture to decompose complex research questions and execute them across large document sets in parallel.

Analysts can run structured queries across hundreds of filings, reports, or diligence materials at once, receiving answers grounded directly in the underlying source documents. This makes Hebbia particularly well-suited to high-volume, research-intensive environments where cross-document reasoning and citation are critical.

Hebbia also provides pre-built extraction templates for common private equity workflows, including CIM analysis, contract review, and financial statement extraction. These templates are configurable, enabling firms to tailor fields and outputs to their specific investment criteria and diligence frameworks.

Because the platform is optimized for deep research and dataset interrogation, it can be more complex to deploy for broader legal operations or general-purpose workflow automation. Its core strength lies in structured analysis at scale, rather than full end-to-end process orchestration across systems and teams.

Rossum

Website: rossum.ai

Rossum is a cloud-native document automation platform focused on transactional workflows, particularly accounts payable. Its core strength lies in template-free data extraction, designed to handle highly variable invoice layouts without requiring rigid rule-based configuration. This makes it well-suited to environments where invoice formats differ significantly across vendors.

One area where Rossum stands out is line-item extraction, which is historically a weak point for many Intelligent Document Processing (IDP) systems. Accurately capturing quantities, unit prices, tax breakdowns, and totals across diverse invoice structures is technically complex, and Rossum has invested heavily in making this reliable at scale.
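To show why line items are hard to get right, and how extracted values can be sanity-checked afterwards, here is a minimal Python sketch of an arithmetic validation pass. It is a generic check, not Rossum's method; the `LineItem` fields, the tolerance, and the sample invoice are assumptions made for illustration.

```python
from dataclasses import dataclass
from decimal import Decimal

@dataclass(frozen=True)
class LineItem:
    description: str
    quantity: Decimal
    unit_price: Decimal
    line_total: Decimal

def find_mismatches(items, stated_subtotal, tolerance=Decimal("0.01")):
    """Return a list of arithmetic inconsistencies in extracted invoice data."""
    problems = []
    for item in items:
        expected = item.quantity * item.unit_price
        if abs(expected - item.line_total) > tolerance:
            problems.append(
                f"{item.description}: expected {expected}, found {item.line_total}"
            )
    computed = sum((item.line_total for item in items), Decimal("0"))
    if abs(computed - stated_subtotal) > tolerance:
        problems.append(f"subtotal: expected {computed}, found {stated_subtotal}")
    return problems

# Hypothetical extracted invoice
items = [
    LineItem("Widgets", Decimal("3"), Decimal("19.99"), Decimal("59.97")),
    LineItem("Shipping", Decimal("1"), Decimal("12.50"), Decimal("12.50")),
]

clean = find_mismatches(items, Decimal("72.47"))    # totals agree, no problems
flagged = find_mismatches(items, Decimal("72.74"))  # transposed digits in subtotal
```

Using `Decimal` rather than floats avoids rounding noise in currency arithmetic. Any invoice that returns a non-empty list can be routed to a human reviewer instead of flowing straight into the ERP.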

Beyond extraction, Rossum is designed to sit directly inside operational finance workflows. It integrates with ERP systems through APIs and prebuilt connectors, enabling processed invoices to flow automatically into downstream systems.

The trade-off is scope. Rossum is highly optimized for transactional document automation, particularly invoices, rather than broader knowledge work or multi-step agentic workflows. It excels at structured data capture and ERP integration but is not positioned as a research platform or a general-purpose AI reasoning system.

Harvey

Website: harvey.ai

If you're a lawyer, you've likely heard of Harvey, the AI platform built specifically for legal professionals. Rather than positioning itself as a general-purpose chatbot, it is designed to support core legal workflows such as contract analysis, legal research, drafting, regulatory review, and due diligence.

The platform embeds legal reasoning directly into structured review processes. It integrates with document management systems and legal repositories, allowing firms to analyze large volumes of contracts and case materials within their existing environments. Capabilities include clause-level analysis, redlining, risk identification, comparison across agreements, and structured outputs aligned to how legal teams actually review documents.

Harvey is highly focused on legal use cases rather than cross-functional document automation or broader enterprise workflows.

Discover the best ChatGPT alternative

The reality is that there is no single “best” alternative, only better alignment between tool and task. If you need fast drafting, brainstorming, or lightweight analysis, a general-purpose LLM like ChatGPT (or its model peers) may be more than sufficient. If your work depends on querying thousands of documents with citation-level accuracy, research-focused platforms may be a better fit. And if the goal is not just answering questions but automating structured, multi-step workflows across systems, then agentic AI platforms offer capabilities that standalone chat interfaces simply weren’t designed to provide.

V7 Go allows users to build powerful automated workflows using ChatGPT, Claude, Gemini, and more. If you want to learn more about how we can help you do what ChatGPT alone won't allow, chat with our expert team.