Changelog Darwin

SAM 3: Text-Prompt Segmentation and Enhanced Auto-Tracking

SAM 3 is now live in V7 Darwin and brings significant improvements to both image segmentation and video annotation workflows. This major update introduces text-based automatic detection, higher-accuracy segmentation, and enhanced auto-tracking capabilities powered by the latest SAM architecture.

What's New

SAM 3 can be used like the regular SAM you're familiar with: manually select an object for segmentation with a single click. Behind the scenes, SAM 3 preprocesses your image and creates polygon masks for all detected objects, with improved accuracy compared to SAM 2.

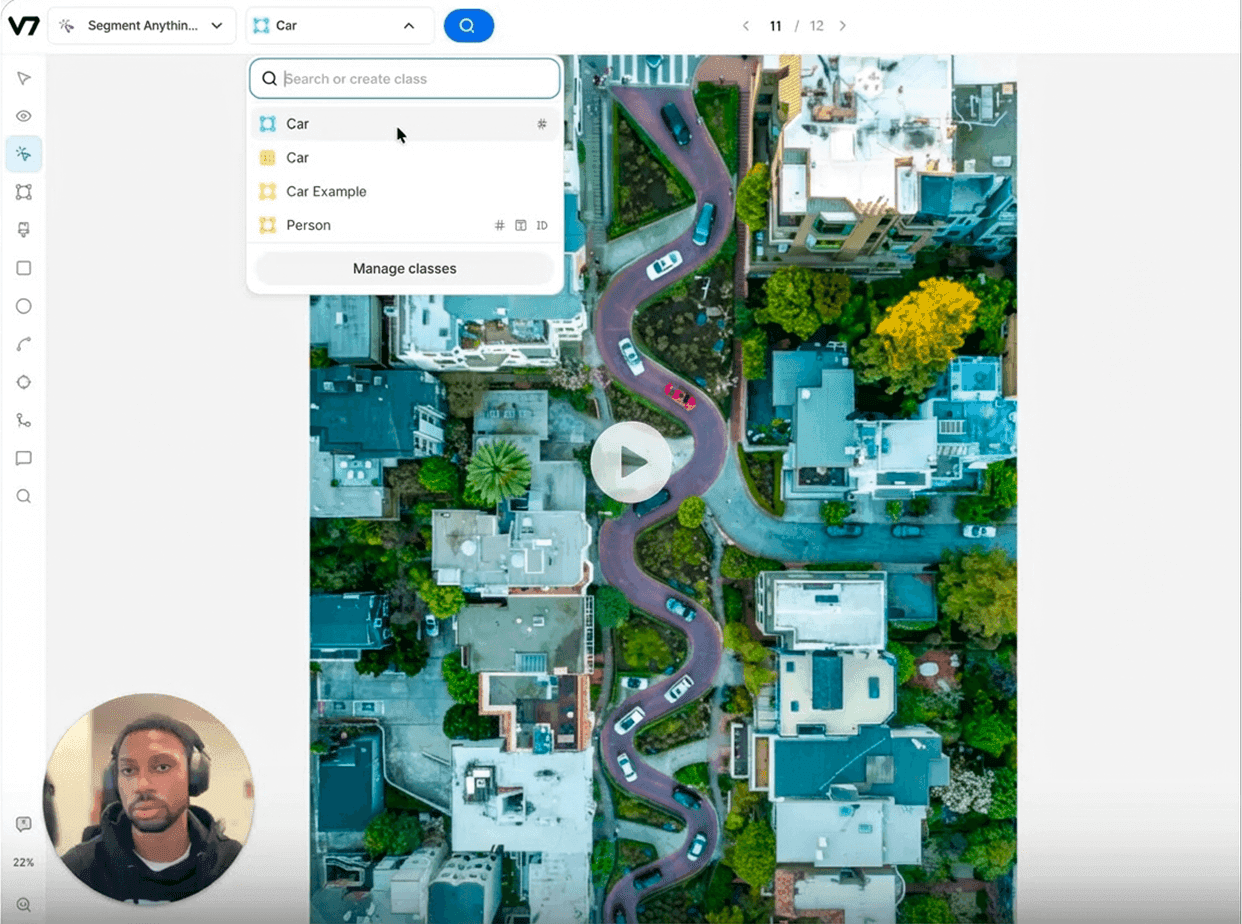

The key breakthrough is automatic detection based on class names. Create a class called car, train, bicycle, or any other object type, select it, and click the magnifying glass (Search) icon in the Darwin UI. SAM 3 then automatically detects all instances of that class and segments them. The updated model supports approximately 4M unique concept labels.

How Class Detection Works

Detection uses the class name as a text prompt. If you name a class "player" and run a search on footage of a tennis match, SAM 3 finds and segments all the players. Name another class "light pole" and search again to segment the light poles separately. In short, the class name serves as the prompt.

After detection, you can refine results manually. Delete false positives, adjust boundaries, or add missed instances using standard SAM point-and-click selection.

The video above shows both methods in action: manual selection of a single object and automatic selection of all instances based on the class name. The two methods can be used independently.

Learn more about using SAM 3:

The Benefits

Labeling datasets with hundreds of similar objects (cars in a parking lot, berries on a bush, bottles on a conveyor) used to mean clicking each one individually. SAM 3's text-based detection handles the bulk of that work in a single action. Combined with auto-tracking, you can annotate a video of pedestrians or a crowded retail floor in a fraction of the time. More accurate tracking also reduces correction work: fewer lost tracks mean fewer frames where you need to re-anchor an annotation or fix identity swaps.

Feb 4, 2026

Improved Dataset Organization with Custom Labels

You can now create custom Dataset Labels and assign them directly from the dataset settings page. If you have Workforce Manager permissions, you can manage these labels centrally. Editing a label’s name or color updates it instantly across all datasets. You can also update labels via the API.

Use labels to filter datasets, group them for a clearer overview, and quickly find what you need. Your filter and grouping preferences remain active for the duration of your session.

Labels are shared across your entire workspace to ensure everyone uses consistent terminology.
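To make the shared-label behavior concrete, here is a minimal Python sketch of one way such a model could work. It is an illustration only, not Darwin's implementation or API: the `Label` class, the `labels` registry, and the `filter_by_label`/`group_by_label` helpers are hypothetical. The key assumption is that datasets store label IDs rather than copies of labels, which would explain why renaming a label shows up across all datasets at once.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Label:
    """Hypothetical workspace-level label."""
    name: str
    color: str


def filter_by_label(datasets, label_id):
    """Return the names of datasets tagged with the given label ID."""
    return sorted(name for name, ids in datasets.items() if label_id in ids)


def group_by_label(datasets, labels):
    """Group dataset names under each label's *current* name."""
    groups = defaultdict(list)
    for name, ids in sorted(datasets.items()):
        for label_id in sorted(ids):
            groups[labels[label_id].name].append(name)
    return dict(groups)


# Workspace-wide registry shared by every dataset (hypothetical data).
labels = {1: Label("medical", "#d62728"), 2: Label("retail", "#2ca02c")}
# Datasets reference label IDs only, never copies of the label itself.
datasets = {"ct-scans": {1}, "shelf-photos": {2}, "xray-batch": {1}}

labels[1].name = "radiology"  # one edit in the registry...
print(group_by_label(datasets, labels))  # ...is reflected in every dataset
# {'radiology': ['ct-scans', 'xray-batch'], 'retail': ['shelf-photos']}
```

Storing references instead of copies is what makes a central rename cheap: no dataset needs to be rewritten when a label changes.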

May 15, 2025

Split and Unify Video Annotations

Correcting video annotations in V7 Darwin is now more straightforward with the new Split and Unify functionalities. These tools let you divide a single annotation across frames or merge multiple annotations into one. They are especially useful for correcting model outputs (such as Auto-Track results) or fixing instance swaps.

To split an annotation, select it, then use the Scissors icon or the Ctrl + S hotkey. The annotation divides at the currently selected frame. All properties and the original instance ID are copied to both resulting annotations. The newly created segment gets a new annotation ID. This is helpful when an object was incorrectly tracked as a single instance.

To unify two annotations, Cmd + click them in the canvas or timeline to select both. Then right-click and choose "Unify", or use the Ctrl + M hotkey. This merges them into a single annotation.
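The split and unify behavior described above can be sketched in Python as follows. This is a hypothetical data model, not Darwin's internal representation or API: `VideoAnnotation`, `split`, and `unify` are illustrative names. Two details are assumptions rather than documented behavior: the selected frame starts the new segment, and `unify` keeps the first annotation's IDs and properties while refusing overlapping frames or mismatched classes.

```python
from dataclasses import dataclass, field


@dataclass
class VideoAnnotation:
    """Hypothetical model of one video annotation track."""
    annotation_id: int
    instance_id: int
    class_name: str
    frames: dict  # frame index -> shape data (e.g. a polygon)
    properties: dict = field(default_factory=dict)


def split(ann, frame, new_annotation_id):
    """Split `ann` at `frame`; assumes the selected frame starts the new segment."""
    before = {f: s for f, s in ann.frames.items() if f < frame}
    after = {f: s for f, s in ann.frames.items() if f >= frame}
    if not before or not after:
        raise ValueError("split frame must fall inside the annotation")
    # Both halves keep the instance ID and a copy of the properties;
    # only the second half receives a new annotation ID.
    first = VideoAnnotation(ann.annotation_id, ann.instance_id,
                            ann.class_name, before, dict(ann.properties))
    second = VideoAnnotation(new_annotation_id, ann.instance_id,
                             ann.class_name, after, dict(ann.properties))
    return first, second


def unify(a, b):
    """Merge two annotations (assumed rules: same class, no shared frames)."""
    if a.class_name != b.class_name:
        raise ValueError("cannot unify annotations of different classes")
    if a.frames.keys() & b.frames.keys():
        raise ValueError("annotations overlap in time")
    merged = dict(sorted({**a.frames, **b.frames}.items()))
    return VideoAnnotation(a.annotation_id, a.instance_id,
                           a.class_name, merged, dict(a.properties))


track = VideoAnnotation(7, 3, "player", {f: f"poly{f}" for f in range(10)}, {"team": "red"})
left, right = split(track, 5, new_annotation_id=8)
print(sorted(left.frames), sorted(right.frames))
# [0, 1, 2, 3, 4] [5, 6, 7, 8, 9]
```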

Learn more: Managing Annotations with Merge, Subtract, Split, and Unify Tools

May 12, 2025

Timeline Summary Mode for Video Annotation

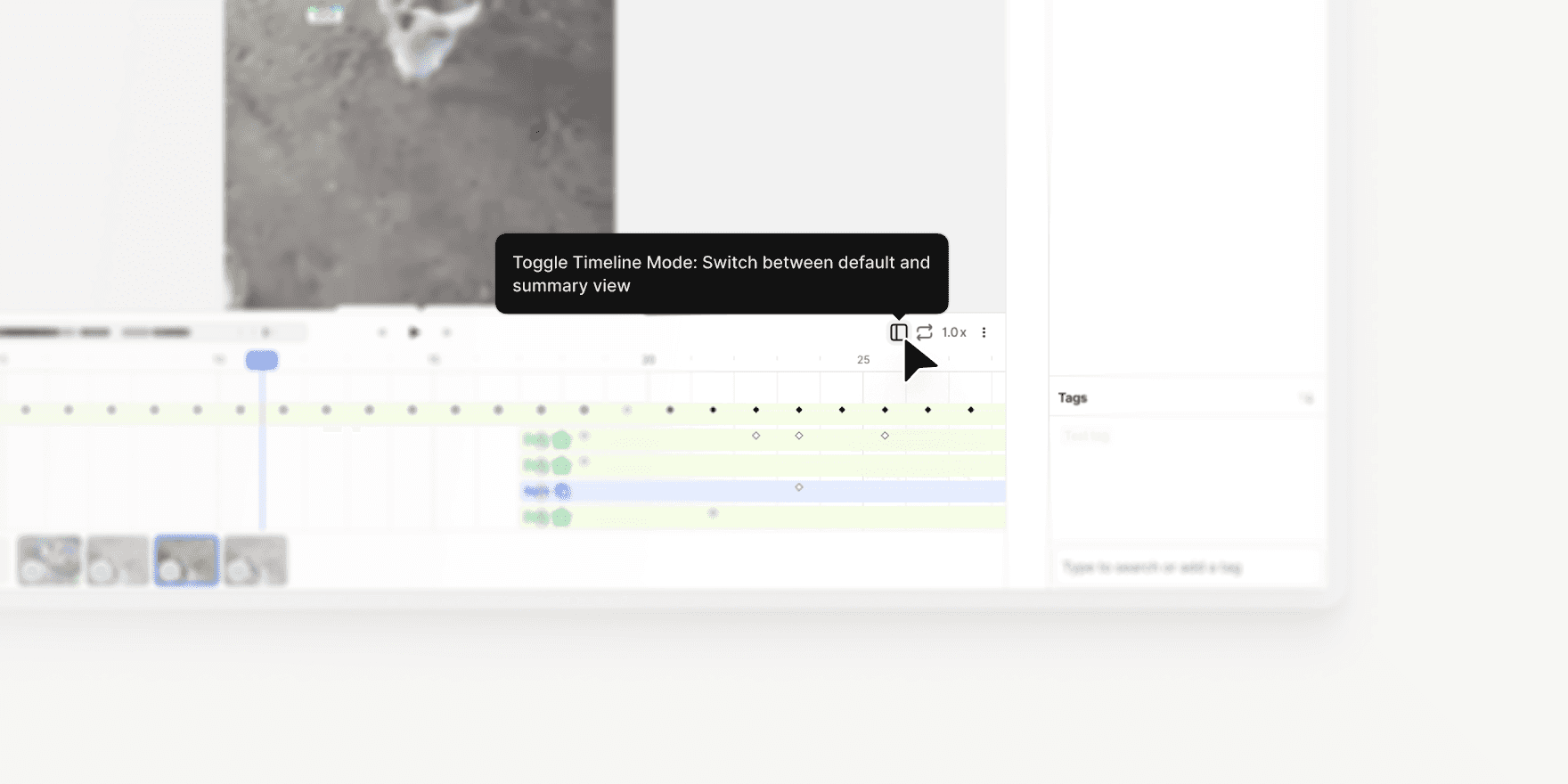

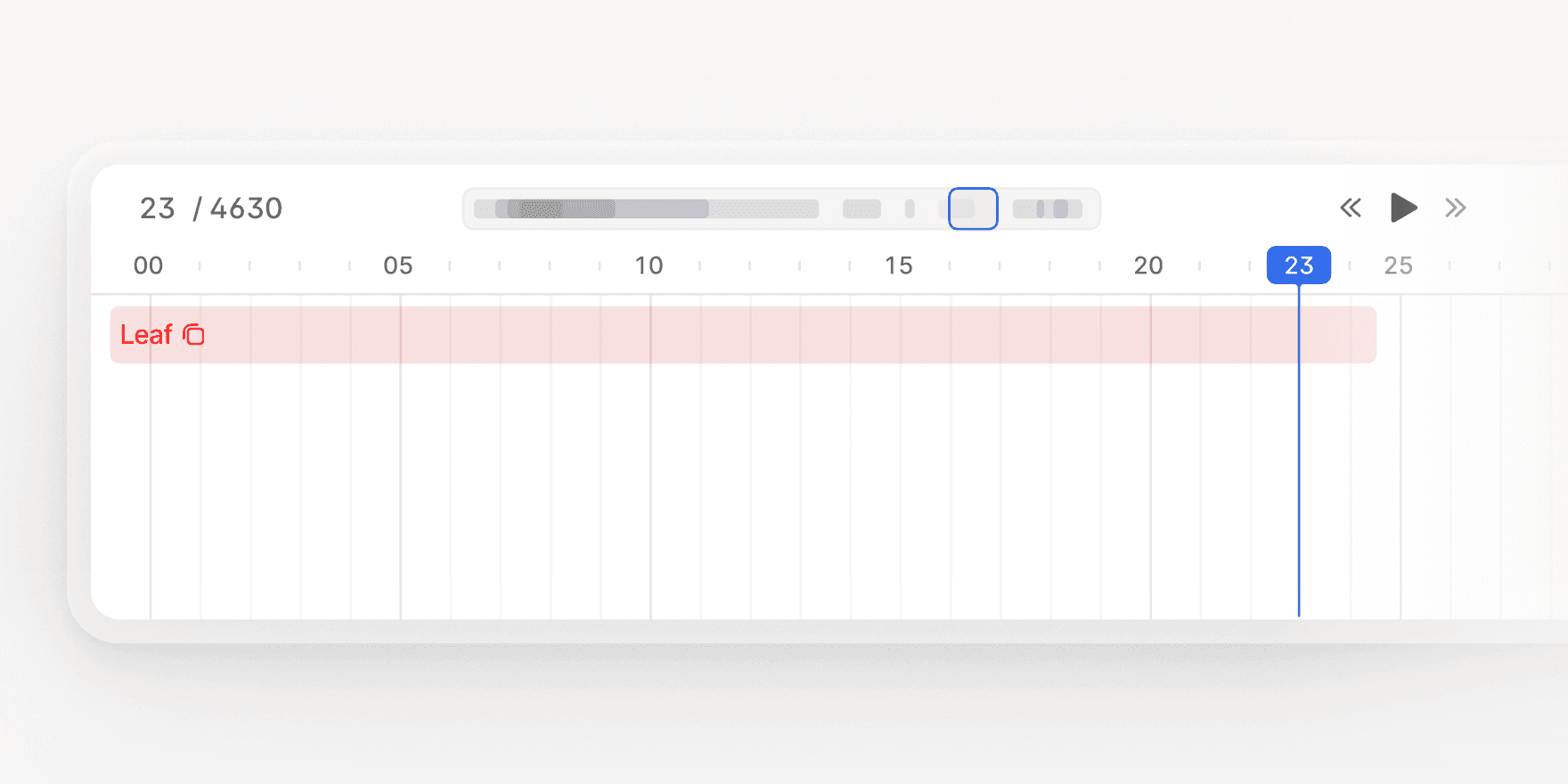

V7 Darwin now includes Timeline Summary, a new feature designed to give you a high-level overview of your video annotations. With the timeline mode enabled, you can quickly review annotation coverage, navigate large files, and streamline your review process.

Access with Shift + D: Open Timeline Summary Mode using the new button on the right-hand side of the interface or the hotkey Shift + D.

Summary by Class: Annotations are grouped by class on the left panel. Expand any class to view individual annotations and their frame ranges.

Click to Jump: Click once on an annotation to navigate to it, or double-click to open it in the regular timeline.

Read-Only Mode: The summary timeline is read-only to prevent accidental edits. However, you can continue to edit annotations in the canvas and layer bar.

Clean View: Keyframes and detailed elements are hidden from the summary view, providing a clear, scannable overview.

Heatmap Overview: A heatmap at the top of the timeline shows annotation density across the video, with the zoomed-in section highlighted in blue for easy orientation.

Timeline Summary Mode makes it easier to navigate, audit, and review video annotations, especially for large or complex files.
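The class grouping and density heatmap can be approximated with a short Python sketch. It is not how V7 computes the summary: `summarize_by_class` and `density_heatmap` are hypothetical helpers, annotations are assumed to be simple dicts with inclusive `start`/`end` frames in the range `[0, total_frames)`, and "density" is assumed to mean the number of annotated frames falling into each bin.

```python
import math
from collections import defaultdict


def summarize_by_class(annotations):
    """Group annotations by class; each entry is a sorted list of frame ranges."""
    groups = defaultdict(list)
    for ann in annotations:
        groups[ann["class"]].append((ann["start"], ann["end"]))
    return {cls: sorted(ranges) for cls, ranges in groups.items()}


def density_heatmap(annotations, total_frames, num_bins):
    """Count annotated frames per bin (end frames assumed inclusive)."""
    bin_size = math.ceil(total_frames / num_bins)
    counts = [0] * num_bins
    for ann in annotations:
        for frame in range(ann["start"], ann["end"] + 1):
            counts[frame // bin_size] += 1
    return counts


annotations = [
    {"class": "car", "start": 50, "end": 59},
    {"class": "person", "start": 20, "end": 39},
    {"class": "car", "start": 0, "end": 9},
]
print(summarize_by_class(annotations))
# {'car': [(0, 9), (50, 59)], 'person': [(20, 39)]}
print(density_heatmap(annotations, total_frames=100, num_bins=4))
# [15, 15, 10, 0]
```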

Learn more: V7 Darwin Documentation

Apr 25, 2025

Flood Fill Tool Now Available in V7 Darwin

This update lets you annotate volumetric regions in CT/MRI scans based on the intensity similarity of connected voxels or pixels.

You can adjust tolerance levels and create annotation masks in just a few clicks. It works much like the flood fill functionality in 3D Slicer or the magic fill/paint bucket tools in image editors such as Photoshop. If you are familiar with these tools, you’ll feel right at home.

Key functionalities:

Switch between 3D and 2D fill for voxel- or pixel-based masks

Adjust tolerance level to label similar regions automatically

Combine Flood Fill with Brushes for the highest level of control

The tool supports Mask-type annotations and is available for all relevant medical imaging files, such as volumetric DICOM and NIfTI files. It appears in your annotation tools panel automatically when the file supports it.
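Flood fill is a classic region-growing algorithm. The sketch below is a generic breadth-first version in Python, not V7's implementation. It assumes tolerance is measured against the seed voxel's intensity (tools differ here; some compare against neighboring values instead), uses 6-connectivity in 3D and 4-connectivity within the seed slice in 2D, and represents the volume as a nested `volume[z][y][x]` list. The `flood_fill` name and its parameters are illustrative.

```python
from collections import deque


def flood_fill(volume, seed, tolerance, mode="3d"):
    """Return the set of (z, y, x) voxels connected to `seed` whose intensity
    lies within +/- `tolerance` of the seed intensity."""
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    z0, y0, x0 = seed
    ref = volume[z0][y0][x0]
    # 4-connectivity inside the seed slice; "3d" also steps between slices.
    steps = [(0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    if mode == "3d":
        steps += [(1, 0, 0), (-1, 0, 0)]
    mask = {seed}
    queue = deque([seed])
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in steps:
            nz, ny, nx = z + dz, y + dy, x + dx
            if not (0 <= nz < depth and 0 <= ny < height and 0 <= nx < width):
                continue  # outside the volume
            if (nz, ny, nx) in mask:
                continue  # already filled
            if abs(volume[nz][ny][nx] - ref) <= tolerance:
                mask.add((nz, ny, nx))
                queue.append((nz, ny, nx))
    return mask


volume = [
    [[100, 102, 500],
     [101, 99, 500],
     [500, 500, 500]],
    [[98, 500, 500],
     [500, 500, 500],
     [500, 500, 500]],
]
print(len(flood_fill(volume, (0, 0, 0), tolerance=5)))             # 5
print(len(flood_fill(volume, (0, 0, 0), tolerance=5, mode="2d")))  # 4
```

Raising the tolerance grows the region; with `tolerance=500` every voxel in this toy volume would be filled.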

Mar 11, 2025

Advanced Filters Now Support Text Properties

Dataset filtering options available in V7 Darwin have just been upgraded. You can now search the full content of text properties to identify whether specific words or phrases have been mentioned. For example, it is possible to automatically generate descriptions of images with AI models and then use them for sorting and filtering files.

You can combine text search with other dataset filters

The search engine for filtering is case-insensitive and will find all instances of a word

To search for multiple words or phrases, add them as multiple filters and set up and/or conditions (you can combine up to 20 filters)

The searched text string should be between 3 and 1000 characters.

This functionality is especially useful for navigating large datasets and pinpointing specific items.
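The filter rules listed above can be modeled with a short Python sketch. It is hypothetical: `validate_term` and `matches` are illustrative names, it applies the 20-filter limit to text filters alone for simplicity, and it assumes plain substring matching after case folding (so "car" would also match "scar"), which may differ from how Darwin's search engine tokenizes text.

```python
MAX_FILTERS = 20
MIN_LEN, MAX_LEN = 3, 1000


def validate_term(term):
    """Enforce the 3-1000 character limit on a search string."""
    if not MIN_LEN <= len(term) <= MAX_LEN:
        raise ValueError(f"search string must be {MIN_LEN}-{MAX_LEN} characters")


def matches(text, terms, mode="and"):
    """Case-insensitive check of one text property against several filters."""
    if not 1 <= len(terms) <= MAX_FILTERS:
        raise ValueError(f"use between 1 and {MAX_FILTERS} filters")
    if mode not in ("and", "or"):
        raise ValueError("mode must be 'and' or 'or'")
    for term in terms:
        validate_term(term)
    haystack = text.casefold()
    hits = [term.casefold() in haystack for term in terms]
    return all(hits) if mode == "and" else any(hits)


descriptions = {
    "img_001.jpg": "A red CAR parked near a tree",
    "img_002.jpg": "Two bicycles leaning on a fence",
}
print([name for name, text in descriptions.items() if matches(text, ["car", "tree"])])
# ['img_001.jpg']
```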

Feb 10, 2025

Item Properties in Logic Stage

You can now use item-level properties as conditions inside any Logic Stage in V7 Darwin. This feature introduces new conditions that let you set up parallel branches in your annotation workflows. For example, if a property is set to a specific value or is missing, an item can be routed back to a previous annotation stage or moved to a custom review stage.

New Conditions:

Verify whether an item property is defined (Item Property is Set/Is Not Set)

Evaluate if an item property contains specific values (Item Property Is Any Of/Is None Of)

To use this functionality, configure the desired properties in your class management tab and then open the workflow editor to add or modify Logic Stages.
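Here is a minimal Python sketch of how the four conditions could drive routing in a Logic Stage. It is an illustration, not Darwin's workflow engine: the stage names (`Annotate`, `Custom review`, `Complete`), the `quality` property, and the helper functions are hypothetical. Two semantics are assumptions: a property counts as unset when it is missing or empty, and "Is None Of" is true for an unset property.

```python
def is_set(props, name):
    """Item Property Is Set: assumed true when present and non-empty."""
    return props.get(name) not in (None, "", [])


def is_any_of(props, name, values):
    """Item Property Is Any Of: true if any current value is in `values`."""
    current = props.get(name)
    current_values = current if isinstance(current, list) else [current]
    return any(v in values for v in current_values if v is not None)


def is_none_of(props, name, values):
    """Item Property Is None Of: assumed true for unset properties too."""
    return not is_any_of(props, name, values)


def route(props):
    """Hypothetical Logic Stage with three parallel branches."""
    if not is_set(props, "quality"):
        return "Annotate"  # send the item back to the annotation stage
    if is_any_of(props, "quality", ["blurry", "overexposed"]):
        return "Custom review"
    return "Complete"


print(route({}))                      # Annotate
print(route({"quality": "blurry"}))   # Custom review
print(route({"quality": "sharp"}))    # Complete
```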

Feb 6, 2025

Audiowave Visualization

V7 Darwin now supports Audiowave Visualization, which overlays an audio waveform onto the video timeline. It is ideal for use cases such as conversation analysis: seeing audio patterns while you label conversations makes the process more intuitive and accurate.

Toggle an audiowave overlay on the video timeline with Shift + A

Use Ctrl + mouse up/down to amplify audio segments for improved clarity

Navigate with smooth playhead movements instead of frame-by-frame jumps

This feature currently supports audio extracted from videos and does not work with audio files. Labels remain tied to video frames rather than specific audio segments, ensuring alignment with existing video-based workflows.
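A waveform overlay is usually drawn by reducing raw audio samples to one peak value per screen column. The Python sketch below illustrates that idea plus a display gain, as a rough analogue of the amplify gesture; it is not Darwin's rendering code. `waveform_peaks` and `frame_at` are hypothetical helpers, and samples are assumed to be normalized to [-1, 1]. `frame_at` shows why labels stay tied to video frames: any playhead time maps back to a whole frame index.

```python
import math


def waveform_peaks(samples, num_buckets, gain=1.0):
    """Reduce samples (assumed normalized to [-1, 1]) to one peak per bucket.

    `gain` scales the displayed peaks and clips them at 1.0, loosely
    mimicking an on-screen amplify control. Returns at most `num_buckets` values.
    """
    if num_buckets <= 0:
        raise ValueError("num_buckets must be positive")
    bucket_size = max(1, math.ceil(len(samples) / num_buckets))
    peaks = []
    for start in range(0, len(samples), bucket_size):
        chunk = samples[start:start + bucket_size]
        peaks.append(min(1.0, max(abs(s) for s in chunk) * gain))
    return peaks


def frame_at(time_s, fps):
    """Map a playhead time in seconds to the video frame a label attaches to."""
    return math.floor(time_s * fps)


samples = [0.1, -0.2, 0.05, 0.4, -0.1, 0.0]
print(waveform_peaks(samples, num_buckets=3))  # [0.2, 0.4, 0.1]
print(frame_at(1.99, fps=25))                  # 49
```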

Feb 5, 2025